Far Cry 4 GPU Benchmark – Unplayable on AMD, Ubi’s Fault; GTX 980 vs. 290X, 770 | GamersNexus

Ubisoft launched all its AAA titles in one go for the holiday season, it seems. Only days after the buggy launch of Assassin’s Creed Unity ($60) – a game we found to use nearly 4GB VRAM in GPU benchmarking – the company pushed Far Cry 4 ($60) into retail channels. Ubisoft continued its partnership with nVidia into Far Cry 4, which includes soft shadows, HBAO+, fine-tuned god rays and lighting FX, and other GameWorks-enabled technologies. Perhaps as a consequence of this partnership, we found that AMD cards suffered substantially in Far Cry 4 on PC.

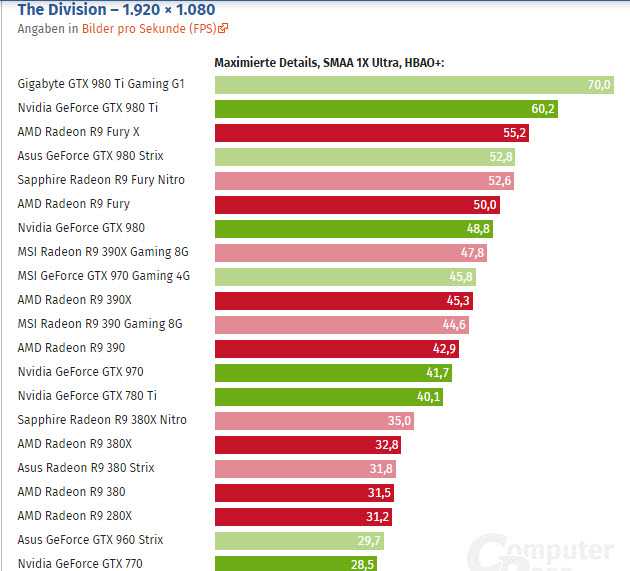

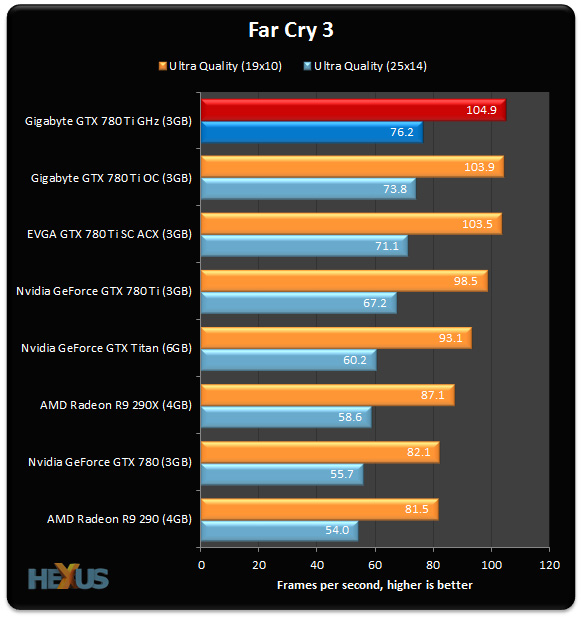

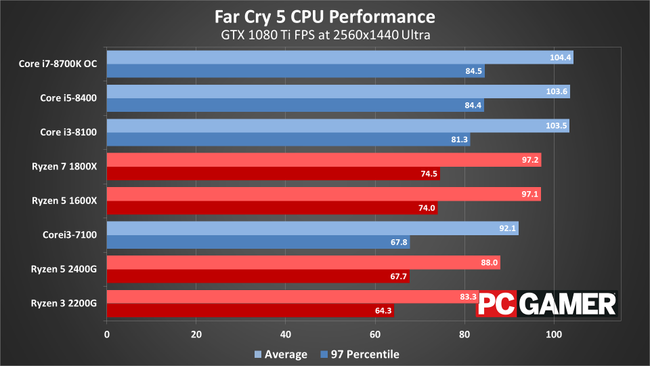

Our Far Cry 4 GPU FPS benchmark analyzes the best video cards for playing Far Cry 4 at max (Ultra) settings. We also tested lower settings to find playable configurations for more modest GPUs. Our tests benchmarked framerates on the GTX 980 vs. GTX 780 Ti, 770, R9 290X, 270X, 7850, and more. RAM and VRAM consumption were both monitored during playtests, and we discovered CPU bottlenecking on some configurations.

Update: For those interested in playing Far Cry 4 near max settings, we just put together this PC build guide for a DIY FC4 PC.

Far Cry 4 Max Settings («NVIDIA» / Ultra) Gameplay & Benchmark

(Note: We just published our Far Cry 4 crash fix guide over here).

Far Cry 4 Graphics Technology

Unlike Assassin’s Creed Unity, Far Cry 4 ships with significantly less graphics fanfare on the PC front. The game looks great, but it’s not necessarily boasting or inventing any new technologies.

Far Cry 4 was developed in partnership with nVidia, which brings a heavier focus on soft shadows (similar to ACU’s PCSS inclusion) and lighting effects. Soft Shadows are more GPU-intensive than other shadow settings because they are softened toward the edges, appearing more realistic as the shadow fades into natural light. Soft Shadows bleed light through the edges, so the end result has fewer “hard” lines that lack any transition between a cast shadow and the environment.
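PCSS decides how soft a shadow edge should be from the distances between the light, the occluder and the surface receiving the shadow. As a rough illustration, here is the similar-triangles penumbra estimate from NVIDIA's published PCSS approach, sketched in Python. This is not Ubisoft's or nVidia's shipping shader; the function and parameter names are hypothetical, and a real implementation runs per pixel on the GPU after a blocker search of the shadow map.

```python
def penumbra_width(d_receiver: float, d_blocker: float, light_size: float) -> float:
    """Estimate the width of a soft shadow's penumbra (PCSS-style).

    d_receiver: distance from the light to the point being shaded
    d_blocker:  average distance from the light to the occluders in front of it
    light_size: width of the area light (hypothetical scene units)
    """
    # No occluder between the light and the receiver: the point is fully lit.
    if d_blocker <= 0.0 or d_blocker >= d_receiver:
        return 0.0
    # Similar triangles: the further the receiver sits behind the blocker,
    # the wider (softer) the penumbra becomes.
    return (d_receiver - d_blocker) * light_size / d_blocker


# An occluder just in front of the receiving surface casts a fairly sharp edge...
print(penumbra_width(d_receiver=10.0, d_blocker=8.0, light_size=1.0))  # 0.25
# ...while the same occluder far from the surface casts a much softer one.
print(penumbra_width(d_receiver=10.0, d_blocker=2.0, light_size=1.0))  # 4.0
```

In PCSS the estimated width then sets the size of the filter kernel used to sample the shadow map for that pixel, which is one reason soft shadows cost more GPU time than fixed-size hard shadows.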

Beyond this, god rays receive heavy graphics emphasis and are optimized for nVidia devices. When fully enabled in the graphics settings, the god rays have tremendous atmospheric impact and are visually impressive.

AO technologies make an appearance, as always, but there’s nothing new on that front.

We also see TXAA availability for nVidia devices (up to 4x), with other AA options including MSAA 4-8x and SMAA.

Test Methodology

The above is a video overview of our benchmark course. Follow the same path to replicate our tests. The video shows Far Cry 4 at max settings (Ultra / “NVIDIA”) with soft shadows, HBAO+, and TXAA 4x at nearly 60FPS / 1080p. (Note: the video was still uploading at time of publication, but should be online shortly). Note that some of these technologies were disabled during comparative tests between AMD and NVIDIA, ensuring a more consistent benchmark.

We ran an identical 120-second circuit in Far Cry 4’s first village for each test.

For analysis of VRAM consumption, we logged GPU metrics using GPU-Z and analyzed them after the benchmarks. RAM consumption was analyzed with the native system resource monitor.
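Post-processing a GPU-Z sensor log can be as simple as pulling one column out of a CSV file. A minimal Python sketch, assuming the log is comma-separated with a header row and a dedicated-VRAM column named `Memory Used [MB]`. Column names differ between GPU-Z versions, so check the header of your own log before relying on the default; the sample log below is made up.

```python
import csv
import io


def summarize_vram(log_text: str, column: str = "Memory Used [MB]") -> dict:
    """Return sample count, peak and average VRAM use from a GPU-Z-style CSV log.

    The default column name is an assumption; adjust it to match the log header.
    """
    reader = csv.reader(io.StringIO(log_text))
    header = [name.strip() for name in next(reader)]  # headers may be space-padded
    idx = header.index(column)
    samples = []
    for row in reader:
        # Skip short or empty rows; float() tolerates surrounding whitespace.
        if len(row) > idx and row[idx].strip():
            samples.append(float(row[idx]))
    if not samples:
        raise ValueError(f"no samples found in column {column!r}")
    return {
        "samples": len(samples),
        "peak_mb": max(samples),
        "avg_mb": sum(samples) / len(samples),
    }


# Made-up log excerpt in the assumed format (note the trailing commas).
SAMPLE_LOG = """Date , GPU Core Clock [MHz] , Memory Used [MB] ,
2014-11-20 12:00:01 , 1126.0 , 3400 ,
2014-11-20 12:00:02 , 1126.0 , 3600 ,
2014-11-20 12:00:03 , 1126.0 , 3500 ,
"""
print(summarize_vram(SAMPLE_LOG))
```

For a real run, read the log file's text (e.g. with `open(path, encoding="utf-8", errors="replace")`) and pass it to the same function.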

We tested Far Cry 4 on several video card configurations with a constant host platform. The Far Cry 4 FPS benchmark was conducted using several different settings within the game: a custom «NVIDIA-only» setting, a preset «Ultra» setting, and «very high,» «medium,» and «low» presets.

All tests were conducted three times for consistency, each using a custom Far Cry 4 graphics benchmark course. We used FRAPS’ benchmark utility for real-time measurement of the framerate, then used FRAFS to analyze the 1% high, 1% low, min, max, and average FPS.
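FRAPS can log a cumulative timestamp for every frame, and summary figures like these can be derived from that log. A minimal Python sketch, assuming `timestamps_ms` holds those cumulative millisecond timestamps and defining "1% low" as the average framerate of the slowest 1% of frames. FRAFS and other tools may define the percentile metrics differently (some use the 99th-percentile frame time instead), and min/max here are per-frame values rather than per-second ones, so treat this as an illustration rather than a reproduction of FRAFS.

```python
def frame_stats(timestamps_ms: list[float]) -> dict:
    """Summarize a run from cumulative per-frame timestamps in milliseconds."""
    if len(timestamps_ms) < 2:
        raise ValueError("need at least two timestamps")
    # Per-frame durations, sorted from fastest to slowest.
    frame_ms = sorted(b - a for a, b in zip(timestamps_ms, timestamps_ms[1:]))
    n = len(frame_ms)
    k = max(1, -(-n // 100))  # number of frames in the 1% tail (rounded up)
    run_seconds = (timestamps_ms[-1] - timestamps_ms[0]) / 1000.0
    return {
        "avg_fps": n / run_seconds,
        "min_fps": 1000.0 / frame_ms[-1],  # slowest single frame
        "max_fps": 1000.0 / frame_ms[0],   # fastest single frame
        "1%_low_fps": 1000.0 / (sum(frame_ms[-k:]) / k),
        "1%_high_fps": 1000.0 / (sum(frame_ms[:k]) / k),
    }


# Made-up run: 99 frames at 10 ms plus one 50 ms hitch.
run = [float(t) for t in range(0, 1000, 10)] + [1040.0]
print(frame_stats(run))
```

A single 50 ms hitch barely moves the average (about 96 FPS here) but drags the 1% low down to 20 FPS, which is why the percentile figures are worth reporting alongside the average.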

NVidia 344.65 stable drivers were used for all tests conducted on nVidia’s GPUs. AMD 14.11.2 beta drivers were used for the AMD cards.

| GN Test Bench 2013 | Name | Courtesy Of | Cost |
|---|---|---|---|
| Video Card | (This is what we’re testing). XFX Ghost 7850 | GamersNexus, AMD, NVIDIA, CyberPower, ZOTAC. | Ranges |
| CPU | Intel i5-3570k CPU; Intel i7-4770K CPU (alternative bench). | GamersNexus; CyberPower | ~$220 |
| Memory | 16GB Kingston HyperX Genesis 10th Anniv. @ 2400MHz | Kingston Tech. | ~$117 |
| Motherboard | MSI Z77A-GD65 OC Board | GamersNexus | ~$160 |
| Power Supply | NZXT HALE90 V2 | NZXT | Pending |
| SSD | Kingston 240GB HyperX 3K SSD | Kingston Tech. | ~$205 |
| Optical Drive | ASUS Optical Drive | GamersNexus | ~$20 |
| Case | Phantom 820 | NZXT | ~$130 |
| CPU Cooler | Thermaltake Frio Advanced | Thermaltake | ~$65 |

The system was kept in a constant thermal environment (21C — 22C at all times) while under test. 4x4GB memory modules were kept overclocked at 2133MHz. All case fans were set to 100% speed and automated fan control settings were disabled for purposes of test consistency and thermal stability.

A 120Hz display was connected to ensure that the display’s refresh rate would not cap framerates. The native resolution of the display is 1920×1080. V-Sync was completely disabled for this test.

A few additional tests were performed as one-offs to test various graphics settings for impact.

The video cards tested include:

- AMD Radeon R9 290X 4GB (provided by CyberPower).

- AMD Radeon R9 270X 2GB (we’re using reference; provided by AMD).

- AMD Radeon HD 7850 1GB (bought by GamersNexus).

- AMD Radeon R7 250X 1GB (equivalent to HD 7770; provided by AMD).

- NVidia GTX 780 Ti 3GB (provided by nVidia).

- NVidia GTX 770 2GB (we’re using reference; provided by nVidia).

- NVidia GTX 750 Ti Superclocked 2GB (provided by nVidia).

- NVidia GTX 970 4GB.

- NVidia GTX 980 4GB.

Far Cry 4 VRAM & RAM Consumption

We expanded upon our VRAM test from the Assassin’s Creed Unity benchmark last week, now testing VRAM consumption on various graphics presets. VRAM consumption hovered in the ~3.5GB range when using high-end GPUs capable of Ultra settings. Even at the game’s lowest settings, if VRAM was available for use, Far Cry would find ways to put it to use; we saw roughly 2GB VRAM consumed even on “low” settings, proving that larger framebuffers are rapidly becoming relevant.

As for system memory, the game uses about 1.6-1.9GB of system RAM at any given time.

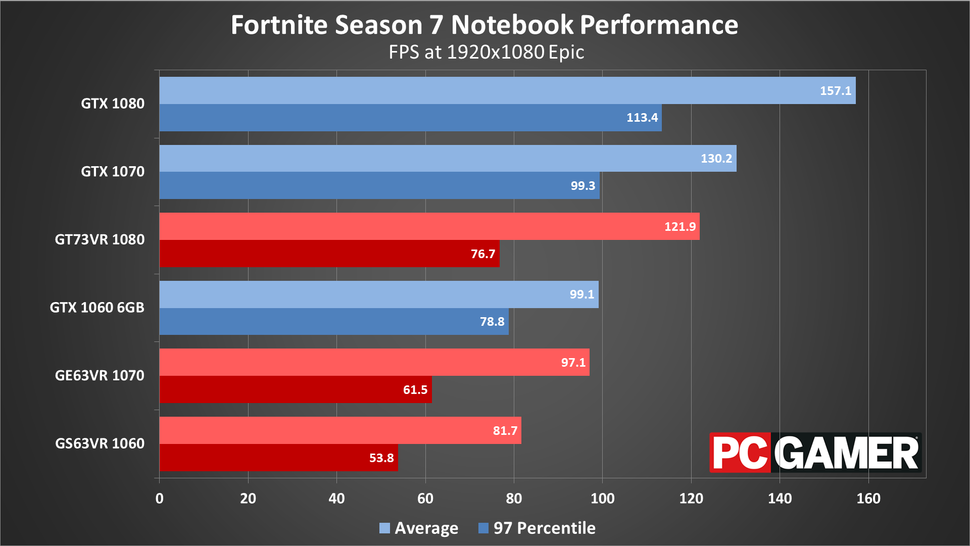

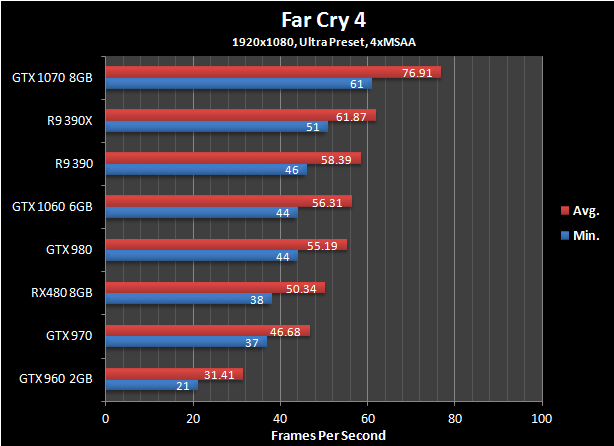

Far Cry 4 Benchmark: GTX 980 vs. GTX 780 Ti, 770, R9 290X, 270X

Below are all of the graphs showcasing performance from our Far Cry 4 graphics card benchmark. The game was tested using these settings:

- “Ultra” preset with “enhanced” god rays.

- “Very High” preset without modifications.

- “Medium” preset without modifications.

- “Low” preset without modifications.

- “NVIDIA” preset for nV GPUs only.

We noticed CPU bottlenecking as we approached lower settings with high-end GPUs. Even a GTX 980 could not push much further than 90FPS on Low settings, although the card itself should be capable of more. We suspect that this is due to our 3570K; a higher-end CPU may allow higher FPS.
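A simple way to reason about that ceiling: in a pipelined renderer the CPU and GPU work on frames in parallel, so the slower of the two roughly sets the frame time. A toy Python model, assuming a fixed CPU cost per frame; the millisecond figures are invented for illustration and were not measured on our 3570K or GTX 980.

```python
def effective_fps(cpu_ms: float, gpu_ms: float) -> float:
    """Toy model: frame time is roughly max(CPU time, GPU time) per frame."""
    return 1000.0 / max(cpu_ms, gpu_ms)


# Hypothetical per-frame costs: the CPU cost stays flat across presets,
# while the GPU cost falls as settings are lowered.
CPU_MS = 11.0
GPU_MS_BY_PRESET = {"Ultra": 16.0, "Very High": 13.0, "Medium": 9.0, "Low": 6.0}

for preset, gpu_ms in GPU_MS_BY_PRESET.items():
    bound = "CPU" if CPU_MS >= gpu_ms else "GPU"
    print(f"{preset:>9}: {effective_fps(CPU_MS, gpu_ms):5.1f} FPS ({bound}-bound)")
```

Once the GPU time drops below the CPU time, lowering settings further stops helping and the framerate plateaus; a faster CPU lowers `CPU_MS` and raises that ceiling.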

Looking strictly at FPS and ignoring the next section, everything from the GTX 770 upward is capable of playing Far Cry 4 at 1080p with “Ultra” settings. The GTX 970 would fall right around where the GTX 780 is on our benchmarks.


Game-Breaking Stuttering, Frame Drops, & Choppiness on AMD

Far Cry 4 has fairly intensive graphics, but the game performs fluidly on all nVidia hardware. The fluidity with which gameplay unfolded meant that even nVidia devices pushing 30-40FPS were still more than “playable” in both input and visual output. AMD devices suffered horribly, though. Even with a 290X on medium settings, we experienced frame stuttering and choppiness (similar to what we saw in Watch_Dogs) that was jarring enough to be considered “unplayable.” Panning the camera left-to-right showcases the stuttering and frame drops, ultimately netting a somewhat nauseating experience on AMD GPUs.

We experienced this stuttering across all tested AMD devices, including the HD 7000-series card and rebrands (250X).

Owners of AMD devices should not purchase Far Cry 4 until further patches and driver updates are issued by both the developer and AMD. We tested with 14.11.2 beta drivers and Ubisoft’s day-1 1GB patch, but we know that updates are forthcoming.


Optimization is Great… for Half the Market

NVIDIA’s cards run shockingly fluidly given the somewhat average framerates. Far Cry 4 is completely playable on a 750 Ti, though you’d want to drop settings a bit (probably close to “low”) for those scenes featuring explosives and high-action. The special “NVIDIA” preset game settings create an environment that features low-hanging fog, cloud-piercing god rays, and AA / AO that make for a beautifully-rendered game.

Unfortunately, none of this matters if you’re on an AMD video card. The game is shamefully unplayable on AMD devices, to the point that I question whether Ubisoft even performed internal testing on AMD GPUs.

What’s The Best Video Card for Far Cry 4?

After our testing, it’s clear that AMD isn’t even a consideration right now – at least, not until further patches and driver updates are released. Assuming a 1920×1080 resolution, you don’t need much in the way of video cards to run at moderate performance on very high settings. Some tweaking of settings will go a long way with Far Cry 4, allowing a GTX 760 or 770 to run the game at very high or near-ultra settings with relative consistency.


Given the high VRAM consumption, we’d recommend a framebuffer of 3GB or larger.

My shortlist of solutions for Far Cry 4 would be:

- GTX 970 4GB ($400 – best performance-to-value at ultra settings / 1080p).

- GTX 980 4GB ($580 – best performance for “NVIDIA” settings or 1440p).

- GTX 760 4GB ($230 – hands-down the best value for Far Cry 4 if playing at high/very high on 1080p).

AMD does not make the list until updates are issued by both parties, though I suspect that Ubisoft is more at fault.

— Steve “Lelldorianx” Burke.

Nvidia GeForce GTX 970 Revisited

When the GTX 970 launched last year, the tech press — Digital Foundry included — were unanimous in their praise for Nvidia’s new hardware. Indeed, we called it «the GPU that nukes almost the entire high-end graphics card market from orbit». It beat the R9 290 and R9 290X, forcing AMD to instigate major price-cuts, while still providing the lion’s share of the performance of the much more expensive GTX 980. But recent events have taken the sheen off this remarkable product. Nvidia released inaccurate specs to the press, resulting in a class action lawsuit for «deceptive conduct».


Let’s quickly recap what went wrong here. Nvidia’s reviewers’ guide painted a picture of the GTX 970 as a modestly cut-down version of its more expensive sibling, the GTX 980. It’s based on the same architecture and uses the same GM204 silicon, but sees CUDA cores reduced from 2048 to 1664, while clock speeds are pared back from a maximum 1216MHz on the GTX 980 to 1178MHz on the cheaper card. Otherwise, it’s the same tech, or so we were told. Anandtech’s article goes into more depth on this, but other changes came to light some months later. The GTX 970 had 56 ROPs, not 64, while its L2 cache was 1.75MB, not 2MB.

However, the major issue concerns the onboard memory. The GTX 980 has 4GB of GDDR5 in one physical block, rated for 224GB/s. The GTX 970 has 3.5GB in one partition, operating at 196GB/s, with 512MB of much slower 28GB/s RAM in a second partition. Nvidia’s driver automatically prioritises the faster RAM, only encroaching into the slower partition if it absolutely has to. And even then, the firm says that the driver intelligently allocates resources, only shunting low priority data into the slower area of RAM.
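The three bandwidth figures fall out of simple arithmetic. A short Python check, assuming the publicly listed specs of both cards: 7Gbps effective GDDR5 on a 256-bit bus made of eight 32-bit memory channels, with the GTX 970's 3.5GB partition spread across seven channels and the 512MB partition on the eighth.

```python
def bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth = bus width (bits) x per-pin data rate (Gbit/s) / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8


GDDR5_RATE = 7.0   # effective per-pin data rate in Gbit/s, same on both cards
CHANNEL_BITS = 32  # width of each memory channel

gtx980 = bandwidth_gb_s(8 * CHANNEL_BITS, GDDR5_RATE)       # all eight channels
gtx970_fast = bandwidth_gb_s(7 * CHANNEL_BITS, GDDR5_RATE)  # 3.5GB partition
gtx970_slow = bandwidth_gb_s(1 * CHANNEL_BITS, GDDR5_RATE)  # 512MB partition
print(gtx980, gtx970_fast, gtx970_slow)  # 224.0 196.0 28.0
```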

The Asus GTX 970 Strix DC2OC

GTX 970s come in all shapes and sizes — you can’t actually buy the reference design illustrated at the top of this page, instead you need to buy a card with a customised cooler. When we reviewed the GTX 970, we had access to MSI’s custom model — an exceptional piece of kit. For this article, we took a look at the Asus equivalent — the Strix DC2OC, as seen above.

The DirectCU 2 cooler is designed for ultra-quiet operation, rated for 0dB in some gaming scenarios. Even when the card is under extreme load, fan speed is minimal — it’s a really impressive piece of kit, and while you’re at the mercy of the silicon lottery to a certain extent, you should be able to overclock the Asus board to match stock GTX 980 performance on many titles.


Also interesting is that the Strix has only a single 8-pin power input; normally, the GTX 970 requires two six-pin PCI Express power cables from your PSU, while some overclocking-orientated models like MSI’s feature an eight-pin/six-pin configuration. It’s always good to have options, and while the MSI card is superb, the Asus offering is a lovely piece of kit and also comes highly recommended.

Regardless of the techniques used to allocate memory, what’s clear is that, by and large, the driver is successful in managing resources. As far as we are aware, not a single review picked up on any performance issues arising from the partitioned memory. Even the most meticulous form of performance analysis, frame-time readings using FCAT (which we use for all of our tests), showed no problems. There was no reason to question the incorrect specs from Nvidia because the product performed exactly as expected, with no additional micro-stutter or other artefacts. Did we miss something? Anything? Now that we know about the GTX 970’s peculiar hardware set-up, is it possible to break it?


We’ve been testing and re-benching the GTX 970 and GTX 980 for a while now. This video sums up our experiences — we can induce stutter on the 970 that’s not found on the 980 in identical conditions. However, doing so requires extreme settings that actively intrude on the gameplay experience.
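Frame-time captures make stutter visible as isolated spikes well above the typical frame time. A minimal Python sketch of flagging such spikes, assuming a list of per-frame durations in milliseconds and an arbitrary threshold of 2.5x the median. This is a hypothetical heuristic for illustration, not our FCAT analysis pipeline, and the two captures below are made up.

```python
from statistics import median


def find_stutters(frame_ms: list[float], factor: float = 2.5) -> list[int]:
    """Return indices of frames lasting more than `factor` times the median frame time."""
    if not frame_ms:
        return []
    typical = median(frame_ms)
    return [i for i, ms in enumerate(frame_ms) if ms > factor * typical]


# Made-up captures of the same scene on two cards.
card_a = [16.7] * 10 + [55.0] + [16.7] * 5 + [48.0] + [16.7] * 3
card_b = [16.7] * 20
print("card A spikes at frames", find_stutters(card_a))
print("card B spikes at frames", find_stutters(card_b))
```

A single median over the whole run is crude; a rolling median over a short window copes better with scenes whose baseline frame time changes.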


Before we went into our tests, we consulted a number of top-tier developers — including a number of architects working on their next next-gen engines, and others who’ve collaborated with Nvidia in the past. One prominent developer with a strong PC background dismissed the issue, saying that the general consensus among his team was that there was «more smoke than fire». Another contact went into a little more depth:


«The primary consumers of VRAM are usually textures, with draw buffers (vertex, index, uniform buffers etc.) coming close behind,» The Astronauts’ graphics programmer Leszek Godlewski tells us. «I wouldn’t worry about performance degradation, at least in the short term: 3.5GB of VRAM is actually quite a lot of space, and there simply aren’t many games out there yet that can place that much data on the GPU. Even when such games arrive, Nvidia engineers will surely go out of their way to adapt their driver (like they always do with high-profile games) — you’d be surprised to see how much latency can be hidden with smart scheduling.»

The question of what ends up in the slower partition of RAM is crucial to whether the GTX 970’s curious set-up will work over the longer term. While we’re accustomed to getting better performance from faster RAM, the fact is that not all GPU use-case scenarios require anything like the massive amounts of bandwidth top-end GPUs offer.

Constant buffers or shaders (read-only, not read-modify-write like framebuffers) will happily live in slower memory, since they are small to read and shared across a wide range of cache-friendly GPU resources. Compute-heavy but data-light tasks could also sit happily in the slower partition without causing issues. These elements should be discoverable by Nvidia’s driver, and could automatically sit in the smaller partition. On the flipside, we wouldn’t like to see what would happen should a game using virtual texturing, like Rage or Wolfenstein, split assets between slow and fast RAM. It wouldn’t be pretty. As it happens though, Wolfenstein worked just fine maxed out at 1440p during our testing.
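Nvidia hasn't published how its driver decides what goes into the slow partition, but the policy described here (fill the fast segment with bandwidth-hungry data first, and let small read-only data spill into the slow one) can be sketched as a greedy allocator. Everything in this Python sketch is hypothetical: the `Resource` type, the priority scale and the example sizes are illustrative only; the 3.5GB and 512MB segment sizes come from the article.

```python
from dataclasses import dataclass


@dataclass
class Resource:
    name: str
    size_mb: int
    priority: int  # hypothetical scale: higher = more bandwidth-sensitive


def place(resources: list[Resource], fast_mb: int = 3584, slow_mb: int = 512) -> dict[str, str]:
    """Greedy placement: most bandwidth-sensitive first, fast segment before slow."""
    free = {"fast": fast_mb, "slow": slow_mb}
    placement = {}
    for res in sorted(resources, key=lambda r: r.priority, reverse=True):
        for segment in ("fast", "slow"):
            if res.size_mb <= free[segment]:
                free[segment] -= res.size_mb
                placement[res.name] = segment
                break
        else:
            # Neither segment has room: the data would have to live in system RAM.
            placement[res.name] = "system"
    return placement


scene = [
    Resource("render targets", 800, priority=10),
    Resource("textures", 2600, priority=8),
    Resource("vertex buffers", 150, priority=6),
    Resource("constant buffers", 64, priority=2),
    Resource("shaders", 40, priority=1),
]
print(place(scene))
```

With these made-up sizes the fast segment fills with render targets, textures and vertex buffers, and only the small, read-mostly constant buffers and shaders land in the 512MB segment.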

Nvidia speaks

Nvidia has faced problems on two fronts since the GTX 970 specs became a hot topic. Firstly, there’s the question of performance and to what extent the RAM partition in particular is an issue. We’ve had to go to extreme lengths to demonstrate differences in performance that could be down to the memory set-up, but unless you’re considering SLI and you’re especially fond of MSAA on the most modern titles, frame-rates and frame-times in most scenarios should be absolutely fine.

The second issue is about trust in the company — and more specifically how a firm with as many smart people as Nvidia could have miscommunicated the GTX 970 specs. Addressing the issue, Nvidia CEO Jen-Hsun Huang (pictured above) posted this message on the company blog.

Hey everyone,

Some of you are disappointed that we didn’t clearly describe the segmented memory of GeForce GTX 970 when we launched it. I can see why, so let me address it.

We invented a new memory architecture in Maxwell. This new capability was created so that reduced-configurations of Maxwell can have a larger framebuffer — i.e., so that GTX 970 is not limited to 3GB, and can have an additional 1GB.

GTX 970 is a 4GB card. However, the upper 512MB of the additional 1GB is segmented and has reduced bandwidth. This is a good design because we were able to add an additional 1GB for GTX 970 and our software engineers can keep less frequently used data in the 512MB segment.

Unfortunately, we failed to communicate this internally to our marketing team, and externally to reviewers at launch.

Since then, Jonah Alben, our senior vice president of hardware engineering, provided a technical description of the design, which was captured well by several editors. Here’s one example from The Tech Report.

Instead of being excited that we invented a way to increase memory of the GTX 970 from 3GB to 4GB, some were disappointed that we didn’t better describe the segmented nature of the architecture for that last 1GB of memory.

This is understandable. But, let me be clear: Our only intention was to create the best GPU for you. We wanted GTX 970 to have 4GB of memory, as games are using more memory than ever.

The 4GB of memory on GTX 970 is used and useful to achieve the performance you are enjoying. And as ever, our engineers will continue to enhance game performance that you can regularly download using GeForce Experience.

This new feature of Maxwell should have been clearly detailed from the beginning.

We won’t let this happen again. We’ll do a better job next time.

Jen-Hsun

Evolve’s VRAM usage (at the top of the screen) compared on GTX 970, 980 and Titan. Visually, the game looks identical on each system, but the large variance in VRAM allocation between the 970 and 980 in particular is certainly curious.

Going into our testing, we checked out a lot of the comments posted to various forums, discussing games where GTX 970 apparently has trouble coping. Games like Watch Dogs and Far Cry 4 are often mentioned as exhibiting stutter — in our tests, they do so whether you’re running GTX 970, GTX 980 or even the 6GB Titan. To this day, Watch Dogs still hasn’t been fixed, while the only path to stutter-free Far Cry 4 gameplay is to disable the highest quality texture mip-maps via the .ini file. Call of Duty Advanced Warfare and Ryse also check out — they do use a lot of VRAM, but mostly as a texture cache. As a result, these games look just as good on a 2GB card as they do on a 3GB card — there’s just more background streaming going on when there’s less VRAM available.
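Using spare VRAM as a texture cache means a card with less memory simply evicts and re-streams more often. A toy Python model of that behaviour, assuming a least-recently-used eviction policy, equal-sized textures and a made-up access pattern; real engines use their own, usually undocumented, streaming heuristics.

```python
from collections import OrderedDict


def count_streaming_loads(requests: list[str], sizes_mb: dict[str, int], budget_mb: int) -> int:
    """Replay texture requests through an LRU cache; return how many had to be streamed in."""
    cache = OrderedDict()  # texture name -> size in MB, least recently used first
    used_mb = 0
    loads = 0
    for tex in requests:
        if tex in cache:
            cache.move_to_end(tex)  # cache hit: mark as most recently used
            continue
        loads += 1  # cache miss: the texture has to be streamed in
        size = sizes_mb[tex]
        # Evict least recently used textures until the new one fits the budget.
        while cache and used_mb + size > budget_mb:
            _, evicted_mb = cache.popitem(last=False)
            used_mb -= evicted_mb
        cache[tex] = size
        used_mb += size
    return loads


# Made-up example: four 512MB texture sets visited in a loop, then revisited.
SIZES = {"A": 512, "B": 512, "C": 512, "D": 512}
PATH = ["A", "B", "C", "A", "B", "C", "D", "A"]
for budget in (2048, 1024):
    print(f"{budget}MB budget: {count_streaming_loads(PATH, SIZES, budget)} loads")
```

Both budgets end up showing the same textures; the smaller one just streams them in twice as often, which is the "more background streaming" trade-off rather than a visual downgrade.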


Actually breaking 3.5GB of VRAM on most current titles is rather challenging in itself. Doing so involves using multi-sampling anti-aliasing, downsampling from higher resolutions or even both. On advanced rendering engines, both are a surefire way of bringing your GPU to its knees. Traditional MSAA is sometimes shoe-horned into recent titles, but even 2x MSAA can see a good 20-30 per cent hit to frame-rates; modern game engines based on deferred shading aren’t really compatible with MSAA, to the point where many games now don’t support it at all while others struggle. Take Far Cry 4, for example. During our Face-Off, we ramped up MSAA in order to show the PC version at its best. What we discovered was that foliage aliasing was far worse than on the console versions (a state of affairs that persisted using Nvidia’s proprietary TXAA), and the best results actually came from post-process SMAA, which, unlike the multi-sampling alternatives, barely impacts frame-rate at all.
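A quick estimate shows why MSAA and deferred shading sit badly together: every render target in the G-buffer has to store every sample. The Python below assumes a hypothetical G-buffer of four 32-bit colour targets plus a 32-bit depth buffer, and ignores compression, resolve targets and padding, so it understates real engines; it only illustrates how the cost scales with resolution and sample count.

```python
BYTES_PER_PIXEL = 4 * 4 + 4  # four RGBA8 colour targets + 32-bit depth (assumed layout)


def gbuffer_mib(width: int, height: int, samples: int = 1) -> float:
    """Memory for the assumed G-buffer, in MiB, at a given MSAA sample count."""
    return width * height * BYTES_PER_PIXEL * samples / (1024 ** 2)


for width, height in ((1920, 1080), (2560, 1440)):
    sizes = ", ".join(f"{s}x: {gbuffer_mib(width, height, s):.1f}MiB" for s in (1, 2, 4, 8))
    print(f"{width}x{height} -> {sizes}")
```

Memory grows linearly with the sample count, and so does the bandwidth needed to write and read those samples every frame, which is where much of the frame-rate hit comes from.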

Looking to another title supporting MSAA — Assassin’s Creed Unity — the table below illustrates starkly why multi-sampling is on the way out in favour of post-process anti-aliasing alternatives. Here, we’re using GTX Titan to measure memory consumption and performance, the idea being to measure VRAM utilisation in an environment where GPU memory is effectively limitless — only it isn’t. Even at 1080p, ACU hits 4.6GB of memory utilisation at 8x MSAA, while the same settings at 1440p actually see the Titan’s prodigious VRAM allocation totally tapped out. The performance figures speak for themselves — at 1440p, only post-process anti-aliasing provides playable frame-rates, but even then, performance can drop as low as 20fps in our benchmark sample. In contrast, a recent presentation on Far Cry 4’s excellent HRAA technique — which combines a number of AA techniques including SMAA and temporal super-sampling — provides stunning results with just 1.65ms of total rendering time at 1080p.

An illustration of how many GPU resources are sucked up by MSAA. As it is, only FXAA keeps ACU frame-rates mostly above the 30fps threshold, and our contention is that GPU resources are better spent on tasks other than multi-sampling. In the case of this title, it’s a shame that Ubisoft supported only FXAA as a post-process alternative.

| AC Unity/Ultra High/GTX Titan | FXAA | 2x MSAA | 4x MSAA | 8x MSAA |
|---|---|---|---|---|
| 1080p: VRAM Utilisation | 3517MB | 3691MB | 4065MB | 4660MB |
| 1080p Min FPS | 28.0 | 24.7 | 20.0 | 12.9 |
| 1080p Avg FPS | 46.1 | 40.2 | 33.6 | 21.2 |
| 1440p: VRAM Utilisation | 3977MB | 4343MB | 4929MB | 6069MB |
| 1440p Min FPS | 20.0 | 16.0 | 12.9 | 7.5 |
| 1440p Avg FPS | 30.3 | 25.6 | 21.5 | 13.0 |
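The table's figures can be turned into relative costs. A small Python helper using only the numbers in the AC Unity table (no new measurements) computes, for each MSAA level, the extra VRAM and the drop in average frame-rate compared with FXAA.

```python
MODES = ["FXAA", "2x MSAA", "4x MSAA", "8x MSAA"]
# Figures copied from the AC Unity / Ultra High / GTX Titan table.
TABLE = {
    "1080p": {"vram_mb": [3517, 3691, 4065, 4660], "avg_fps": [46.1, 40.2, 33.6, 21.2]},
    "1440p": {"vram_mb": [3977, 4343, 4929, 6069], "avg_fps": [30.3, 25.6, 21.5, 13.0]},
}


def msaa_cost(vram_mb: list[int], avg_fps: list[float]) -> list[tuple[int, float]]:
    """(extra VRAM in MB, % lower average fps) for each mode relative to the first (FXAA)."""
    base_vram, base_fps = vram_mb[0], avg_fps[0]
    return [(v - base_vram, 100.0 * (1.0 - f / base_fps)) for v, f in zip(vram_mb[1:], avg_fps[1:])]


for res, data in TABLE.items():
    for mode, (extra, drop) in zip(MODES[1:], msaa_cost(data["vram_mb"], data["avg_fps"])):
        print(f"{res} {mode}: +{extra}MB VRAM, {drop:.1f}% lower average fps than FXAA")
```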

To actually get game-breaking stutter that does show a clear difference between the GTX 970 and the higher-end GTX 980 required extraordinary measures on our part. We ran two cards in SLI — in order to remove the compute bottleneck as much as possible — then we ran Assassin’s Creed Unity on ultra high settings at 1440p, with 4x MSAA. As you can see in the video at the top of this page, this produces very noticeable stutter that isn’t as pronounced on the GTX 980. But we’re really pushing matters here, effectively hobbling our frame-rate for a relatively small image quality boost. Post-process FXAA gives you something close to a locked 1440p60 presentation on this game in high-end SLI configurations — and it looks sensational.

The testing also revealed much lower memory utilisation than the Titan, suggesting that the game’s resource management system adjusts what assets are loaded into memory according to the amount of VRAM you have. Based on the Titan figures, 2x MSAA should have maxed out both GTX 970 and GTX 980 VRAM, yet curiously it didn’t. Only pushing on to 4x MSAA caused problems.
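One plausible way for a resource manager to fit a VRAM budget, and the same idea behind disabling the top mip level in an .ini file, is to drop the highest-resolution mip of each texture until the set fits. A Python sketch assuming uncompressed 4-byte texels; the function names are hypothetical, and we don't know how ACU's own streaming system makes this decision. Because each mip level holds a quarter of the texels of the one above it, dropping the top level alone removes about 75 per cent of a texture's memory.

```python
def mip_chain_bytes(width: int, height: int, bytes_per_texel: int = 4, skip_top: int = 0) -> int:
    """Bytes used by a full mip chain, optionally without its `skip_top` largest levels."""
    total, level = 0, 0
    while True:
        if level >= skip_top:
            total += width * height * bytes_per_texel
        if width == 1 and height == 1:
            return total
        width, height = max(1, width // 2), max(1, height // 2)
        level += 1


def top_mips_to_drop(textures: list[tuple[int, int]], budget_bytes: int) -> int:
    """Smallest number of top mip levels to drop from every texture to fit the budget."""
    if budget_bytes < 0:
        raise ValueError("budget must be non-negative")
    skip = 0
    while sum(mip_chain_bytes(w, h, skip_top=skip) for w, h in textures) > budget_bytes:
        skip += 1
    return skip


full = mip_chain_bytes(4096, 4096)
without_top = mip_chain_bytes(4096, 4096, skip_top=1)
print(full, without_top, f"{100 * (1 - without_top / full):.1f}% saved")
print(top_mips_to_drop([(4096, 4096)] * 10, budget_bytes=500 * 1024 ** 2))
```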


Finding intrusive stutter elsewhere was equally difficult, but we managed it, albeit with extreme settings that neither we nor the developer of the game in question would recommend. Running Shadow of Mordor at ultra settings at 1440p, while downscaling from an internal 4K resolution with ultra textures engaged, showed a clear difference between the GTX 970 and the GTX 980, something which must be caused by the different memory set-ups. To be honest, this produced a sub-optimal experience on both cards, but there were areas that saw noticeable stutter on the 970 that were far less of an issue on the 980. But the fact is that the developer doesn’t recommend ultra textures on anything other than a 6GB card, at 1080p no less. Dropping down to the recommended high level textures eliminates the stutter and produces a decent experience.

Texture comparison: PlayStation 4, Ultra Textures, High Textures, Medium Textures.

Shadow of Mordor is a key title that highlights the VRAM issue. To get console equivalent visuals, in this case high quality textures, you need at least 3GB of RAM. Right now, 2GB of memory on your GPU is just fine for 1080p gaming on most other titles we’ve tested. However, we expect that situation to change this year.

In conclusion, we went out of our way to break the GTX 970 and couldn’t do so in a single card configuration without hitting compute or bandwidth limitations that hobble gaming performance to unplayable levels. We didn’t notice any stutter in any of our more reasonable gaming tests that we didn’t also see on the GTX 980, though any artefacts of this kind may not be quite as noticeable on the higher-end card — simply because it’s faster. In short, we stand by our original review, and believe that the GTX 970 remains the best buy in the £250 category — in the here and now, at least. The only question is whether games will come along that break the 3.5GB barrier — and then to what extent Nvidia’s drivers hold up in ensuring the slower VRAM partition is used effectively. Certainly, Nvidia’s drivers team is a force to be reckoned with. One contact tells us that their game optimisation efforts include swapping out computationally expensive shaders with hand-written replacements, boosting performance at the expense of the ever-increasing driver download size. When there’s that level of effort put into the drivers, it’s not an amazing stretch of the imagination to see that major titles at least get the attention they deserve on GTX 970.


The future: how much VRAM do you need?

Nvidia has used partitioned VRAM before on a number of graphics cards, going all the way back to the GTX 550 Ti, but there’s never been quite so much concern about the set-up in the enthusiast community as there is now with the GTX 970. Part of this is because of the way in which the partitioned RAM was discovered, and the lack of up-front disclosure from Nvidia. But perhaps more important is the full impact that the consoles’ unified memory will have on PC game development, where memory is still split into discrete system and video RAM pools. Just how much memory is required to fully future-proof a GPU purchase, and how fast should it be?


The future of graphics hardware is leaning towards the notion of removing memory bandwidth as a bottleneck by using stacked memory modules, but one highly respected developer can see things moving in another direction based on the way games are now made.

«I can totally imagine a GPU with 1GB of ultra-fast DDR6 and 10GB of ‘slow’ DDR3,» he says. «Most rendering operations are actually heavily cache dependent, and due to that, most top-tier developers nowadays try to optimise for cache access patterns… with correct access patterns, correct data preloading and swapping, you can likely stay in your L1/L2 cache all the time.»

While the unified RAM set-up of the current-gen consoles could cause headaches for PC gamers, bandwidth limitations in their APU processors are necessitating a drive for optimisation that keeps critical code running within memory directly attached to the GPU itself, making the need for masses of high-speed RAM less important. And that’s part of the reason why the Nvidia Maxwell architecture that powers GTX 970 performs so well — it’s built around a much larger L2 cache partition than its predecessor.

But looking towards the future, the truth is that we just can’t be entirely sure how much video RAM we’ll need on a GPU to sustain us through the current console generation, while maintaining support for all the bonus PC goodies like higher resolution textures, enhanced effects and support for higher resolutions. What has become clear is that 2GB cards are the bare minimum for 1080p gaming with equivalent quality to the eighth gen consoles, while 3GB is recommended. Today’s high-end GPUs seem to cope easily enough for now, but the game engines of the future could see the requirement increase beyond the 4GB seen in today’s top-tier cards. As for the GTX 970, could there come a time when 3.5GB of fast RAM isn’t enough? The truth is, we don’t know — but the more the current-gen consoles are pushed, the more critical the amount of memory available on your graphics card becomes.

«The harder we push the hardware and the higher quality and the higher res the assets, the more memory we’ll need and the faster we’ll want it to be,» a well-placed source in the development community tells us. «Our games currently in development are hitting memory limits on the consoles left, right and centre now — so memory optimisation is on my list pretty much constantly.»

Farthest headshot — Page 2

Hero

December 2016

Improved my score

I'm not sure you can go beyond 500 m in this game. Beyond 400 m the trajectory becomes steeply arched, and the bullets are almost invisible.

December 2016

Play on Saint-Quentin and Sinai Desert: these are very «long-range» maps (I mean Conquest mode, of course). Empire's Edge and Fao Fortress are not suitable. Empire's Edge, because there is a mountain in the middle of the map and the maximum distance will hardly reach 400 m. Fao, because there are too few chances to find a victim lying down or standing still at more than 300 m (unless you are a pro at judging the point of impact by eye). Never played.

I can only give one piece of advice: use the rifle with the highest bullet speed (yes, bullet speed exists in this game, although it is not listed anywhere in the weapon stats), which is the Gewehr 98. I noticed this right away but thought I was imagining it; then I saw the full stats for all the guns on a website, and it turned out to be true.

As a «punishment» for this, the Gewehr 98 is the slowest sniper rifle, with a rate of fire of 50. This applies only to the «Sniper» variants of the rifles.

So, thanks to the bullet's speed, you wait less for it to reach the target.
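The reasoning about bullet speed can be made concrete with a simplified, drag-free model. Flight time is distance divided by speed, and gravity drop over that time is ½ g t². The velocities below are hypothetical because the game does not list them. Battlefield's actual ballistics may model drag and other effects differently, so this is a sketch, not the game's formula.

```python
G = 9.81  # gravitational acceleration, m/s^2


def time_of_flight(distance_m, velocity_ms):
    """Seconds for a bullet at constant speed to cover distance_m (no drag)."""
    return distance_m / velocity_ms


def drop_m(distance_m, velocity_ms, g=G):
    """Vertical drop in metres over that flight time, ignoring drag."""
    t = time_of_flight(distance_m, velocity_ms)
    return 0.5 * g * t ** 2


if __name__ == "__main__":
    # Hypothetical muzzle velocities; the game does not publish them.
    for v in (500.0, 1000.0):
        t = time_of_flight(500, v)
        print(f"{v:.0f} m/s: t = {t:.2f} s, drop = {drop_m(500, v):.2f} m")
```

In this model, doubling the bullet speed halves the flight time and cuts the drop to a quarter. That is why a faster round needs less lead on moving targets and less holdover at range.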

I say this as someone who prefers to play Support and Medic, and who only picks sniper out of desperation (when we are completely pinned down). In fact, I didn't play sniper at all for the first three weeks; then I started playing it to earn a medal.

My longest shot is 530 m. On Saint-Quentin, from the British spawn, I took out the anti-aircraft gunner standing at point A. We were pinned down at the time, and the gunner was firing at our airship and paid no attention to the fact that I was shooting at him. Naturally, the headshot was an accident. I had killed anti-aircraft gunners from there before, but with body shots.

P.S. Come to think of it, there are also spots on Empire's Edge from which you can shoot over long distances. If you camp in the fortress at point G or at the Swiss spawn, there is one spot where people camp a lot: the mountain next to point C, with a protruding ledge that snipers like to lie on. I just went to an empty server and measured the distances with a rangefinder. From the far corner of the fortress (point G) to this rock it is 530 m. From the Swiss respawn it is 550 m (there is a spot on the rocks where you can lie down and fire at points C and D).

Hero (Retired)

December 2016

@iksec @Ancorig @beetle_rus I think headshots at over a thousand meters are possible. There are skilled players out there: in Battlefield 4, for example, the farthest headshot was 3076 m, and in Battlefield 3 it was 3225 m.

(There is a video of it online: https://www.youtube.com/watch?v=8wHcZ5SD79Q) So, considering the maps in this game, especially Sinai Desert, I think more is possible.

Let’s play!!!

I am not an EA employee.

December 2016

Well, distances like that (3000 m, or even 1000 m) don't exist in this game. Most maps are less than 1000 m from edge to edge. On Sinai Desert, the farthest I have shot from was about 700 m, from the British spawn to the top of the mountain at point F (and I lost that duel because of stupid physics: you can't crawl, because you get stuck on the rocks). On Fao Fortress, it is 500 to 600 m from the British respawn to the fortress. On Empire's Edge, as I wrote above, the farthest firing points are 550 m apart. I went to Giant's Shadow and took a look: 500 m at most. The most «long-range» map I have found is Saint-Quentin, where the distance from spawn to spawn is about 750 m (it is quite realistic to shoot from the anti-aircraft guns at the British spawn to the anti-aircraft guns at the German spawn). But again, you have to be sooooo lucky, a real Robin Hood))). Or arrange it with a friend.

So even though maps barely reach 1000 m from edge to edge, that does not mean you can actually shoot across those distances.

Community Manager (retired)

December 2016

A task for the patient. =)

As already mentioned here, it all depends on the size of the maps and the area you are shooting across. This changes from one game in the series to the next, but there is always somewhere to set a new record. Given the First World War setting, shorter distances are entirely justified.

December 2016

It hadn't occurred to me before, but I just looked at the battlefieldtracker website, and the farthest headshot there is 1076 m!!

I don't even know where he could have shot from, let alone how he managed to hit a head at that distance.

Hero

December 2016

Definitely a staged shot)

December 2016

Under 600 m you can still make such shots in normal play, but staged shots go even farther.

December 2016

By the way, I managed to repeat my own shot on Saint-Quentin several times (even 3 times in one game!!!), because I found an exact landmark: if you always aim at it, you can hit the «anti-aircraft gunner» in the head. While firing at the airship, they behave like lekking capercaillie: they don't pay attention to anything else.

Hero

December 2016

19_Leo_84 wrote:

While firing at the airship, they behave like lekking capercaillie: they don't pay attention to anything else

And they are right to. Only egoists scoff at players who sacrifice themselves for the team. Destroying the airship is the team's most important task.

ASUS Eee Pad Slider SL101 Tablet: Sliding Knot

When closed, the Eee Pad Slider looks almost like a regular tablet. The two halves of the body fit together very precisely, with a minimal gap between them. So unless you know about its «special abilities», you would never guess that the device can transform.

Generally speaking, the most popular version of the Slider is the dark one. But the unit we received for testing was the light version, with a white back and pale gold trim.

To bring the device into full combat readiness, lift the far edge of the lid slightly. A spring-assisted mechanism then kicks in, and the lid rises to the correct position by itself.

Neat arrows printed on the front and side edges show the user which edge to lift. In the dark, though, you can still get it wrong. The arrows are not visible then, and because the display rotates automatically, the screen does not tell you which way round the tablet is.

Like the previously tested Eee Pad Transformer, the Slider impresses with excellent weight distribution. On flat surfaces it stands securely and shows no tendency at all to tip over backwards. The mechanism works crisply and pleasantly. Still, the construction feels noticeably less solid; the Transformer is far ahead in this respect.

The Slider's keyboard is not as comfortable as the Transformer's. The horizontal key pitch is the same, 17 mm, but vertically the keys are heavily compressed, down to 14 mm. In addition, almost all of the service keys (Enter, Shift, and so on) have been noticeably shrunk, and a number of function keys have disappeared completely.

The keyboard is pushed right up to the edge of the case, so there is no room for a touchpad. Worse, there is also no room for the surface your hands rest on while typing, which English aptly calls a «palmrest». This does not make working with text any more comfortable.

In terms of connectivity, the Slider is also well behind the Transformer. There is only one USB port, and there is no full-size SD/MMC card reader, only a microSD slot.

On the other hand, all of the Slider's connectors are built into the tablet itself, whereas on the Transformer both USB ports and the SD/MMC slot are available only on the docking station.

| ASUS Eee Pad Slider SL101 specifications | |
|---|---|
| Processor | NVIDIA Tegra 250: ARM Cortex-A9 MPCore, 1 GHz, dual-core |
| Graphics | NVIDIA GeForce ULP (integrated) |
| Display | 10.1″, 1280×800, IPS, capacitive touchscreen |
| RAM | 1 GB |
| Flash memory | 16/32 GB; free one-year ASUS Web Storage subscription with unlimited storage |
| Tablet interfaces | 1 × USB 2.0; 1 × microSD; 1 × Mini HDMI; 1 × headphone/mic-in (3.5 mm mini-jack); 1 × 40-pin connection port |
| Wireless | Wi-Fi 802.11b/g/n; Bluetooth 2.1 + EDR |
| Sound | One speaker, microphone |
| Power | Li-Pol 24.4 Wh (10.8 V, 2260 mAh); 18 W power supply (5 V, 2 A or 15 V, 1.2 A) |
| Other | Physical keyboard, flip design, 1.2 MP front webcam, 5 MP rear camera, rotation sensor, ambient light sensor, GPS module |
| Size, mm | 273 × 180 × 17.3 |
| Weight, g | 970 |
| Operating system | Google Android 3.1 (Honeycomb) |
| Official manufacturer's warranty | 12 months |
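
The power row can be cross-checked with simple arithmetic. Battery energy is voltage times capacity, and adapter power is voltage times current. The figures below only re-derive the listed values and assume nothing beyond the table.

$$
E = 10.8\,\text{V} \times 2.260\,\text{Ah} \approx 24.4\,\text{Wh}
$$

$$
P_{15\,\text{V}} = 15\,\text{V} \times 1.2\,\text{A} = 18\,\text{W}, \qquad P_{5\,\text{V}} = 5\,\text{V} \times 2\,\text{A} = 10\,\text{W}
$$

So the adapter's 18 W rating corresponds to its 15 V mode, while the 5 V / 2 A mode works out to 10 W.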

In terms of internals, the ASUS Eee Pad Slider is an exact copy of the Eee Pad Transformer. The operating system recognizes the physical keyboard the same way it does on the Eee Pad Transformer, as a docking station, and the keyboard even has its own model name: SL102.

It uses the same NVIDIA Tegra 2 platform and the same Android Honeycomb operating system. The version has grown to 3.1, but on the surface there is not much difference. The tested Eee Pad Slider had 32 GB of memory, which you can expand with a microSD card, a flash drive, or a hard drive (NTFS is supported). External drives are still mounted in a separate Removable folder, but that folder has now moved to the root directory, which is even more convenient.

Since the Eee Pad Slider's physical keyboard has no extra buttons, all settings are adjusted with the operating system's standard tools. The only thing ASUS added is the ability to take screenshots: to do so, press and hold the virtual «Recent Apps» button.

The set of built-in cameras is again exactly the same as in the Eee Pad Transformer: 1.2 MP on the front side of the tablet and 5 MP on the back.

Of course, the picture quality is just as mediocre.

HD video playback still has problems. NVIDIA promised to fix them in the Kal-El platform and, to its credit, is keeping its word: there are no fixes for Tegra 2, and none seem to be expected. For those who missed or forgot what we are talking about and how to deal with this ailment, here is a quote from the Eee Pad Transformer review:

Traditionally, in reviews of devices based on NVIDIA Tegra 2, the device's video playback capabilities are summed up with a brief remark like “you can watch 1080p video and it won't slow down.” However, we were lucky enough to run into a video playback problem, so we hasten to share the results of a small study of this aspect of life with Tegra 2 tablets.

In short, it comes down to this: «despite the advertised omnivorousness of Android devices (as opposed to you-know-whose), far from every HD video can be played on a tablet.» The author of this review was especially lucky: out of his entire modest collection of video files, the ASUS tablet managed to play only one. With the rest, it was either this:

This is how an attempt to play many not-so-exotic video formats can end

Or this:

File format H.264, 720p, MKV container. Nothing particularly heavy or out of the ordinary, right?

NVIDIA's Russian office commented on the issue as follows:

“The NVIDIA Tegra 2 platform supports 1080p H.264 video in the Baseline profile. Accordingly, if a video downloaded from the Internet does not play or plays poorly, it must be converted to this format, for example, using the Badaboom program.

High Profile support will be implemented in a new version of Tegra codenamed Kal-El. With legal, protected content, this should not be a problem.”

As an example, we were asked to test the system with a correctly encoded video. We checked, and there really are no problems: 1080p video (H.264, MP4 container, 9896 Kbps bitrate) plays without any hint of slowdown.

In general, please note that video playback on Tegra 2 tablets may fail. Moreover, problems are hard to predict from the file itself; the only way to be sure a file works is to try playing it on the tablet. So, to be safe, we recommend transcoding the video, either with programs that let you select the Baseline profile or with software that can adapt video for a specific device.
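As one way to do such transcoding, here is a sketch that builds a command line for the open-source ffmpeg tool with the libx264 encoder restricted to the Baseline profile. ffmpeg is a swapped-in example; the review itself mentions Badaboom. The helper name `build_baseline_transcode_cmd` is hypothetical. The default level 4.0 (the lowest H.264 level whose frame-size limit covers 1080p) is an assumption, not a setting confirmed by NVIDIA or ASUS.

```python
import shlex
import subprocess


def build_baseline_transcode_cmd(src, dst, level="4.0"):
    """Return an ffmpeg argument list that re-encodes src to H.264 Baseline in MP4.

    Hypothetical helper: the level default is an assumption, not a Tegra 2 spec.
    """
    return [
        "ffmpeg",
        "-i", src,
        "-c:v", "libx264",          # H.264 video via the x264 encoder
        "-profile:v", "baseline",   # Baseline profile, per NVIDIA's comment
        "-level", level,            # assumed level; 4.0 covers 1080p frame sizes
        "-pix_fmt", "yuv420p",      # 8-bit 4:2:0, which Baseline requires
        "-c:a", "aac",              # re-encode audio to AAC for the MP4 container
        "-movflags", "+faststart",  # move the index to the start of the file
        dst,
    ]


def transcode(src, dst):
    """Run the command; requires ffmpeg to be installed and on PATH."""
    subprocess.run(build_baseline_transcode_cmd(src, dst), check=True)


if __name__ == "__main__":
    # Print the command for a sample file instead of running it.
    print(shlex.join(build_baseline_transcode_cmd("input.mkv", "output.mp4")))
```

Building the argument list separately from running it keeps the settings easy to inspect. As the review advises, the result should still be checked by playing it on the tablet itself.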

Included with the tablet is an extremely light, compact and very well made power adapter with a detachable plug. It connects directly to a power outlet. At the other end is a regular USB port, to which the device itself is connected for charging via the bundled cable.

The Eee Pad Slider is surprisingly heavy. The hardware keyboard, the Slider's only functional advantage over monolithic competitors, makes it one and a half times heavier. As a result, the Slider weighs almost a kilogram.

Unlike the Transformer's, the Eee Pad Slider's display stays exposed when the device is closed, so you can't do without a cover. The device comes with a carrying pouch made of a pleasant velvety synthetic fabric. If you add the weight of the cover (just over 180 grams) to the weight of the tablet itself, the kit weighs 1.15 kilograms. The Transformer, which does not require a cover, weighs only 150 grams more.