NVLink vs. SLI and Multiple GPUs

It’s a reasonable enough assumption to make that if one new graphics card (GPU) can speed up your work by X amount, two will do the job twice as fast, right?

Well, it depends.

Putting all the GPUs you can afford into one computer for ultimate power might sound like a great idea that should be easy to do, but like many things, there’s a little more nuance to it than just slapping together some GPUs you’ve found and calling it a day.

What is SLI and how does it work?

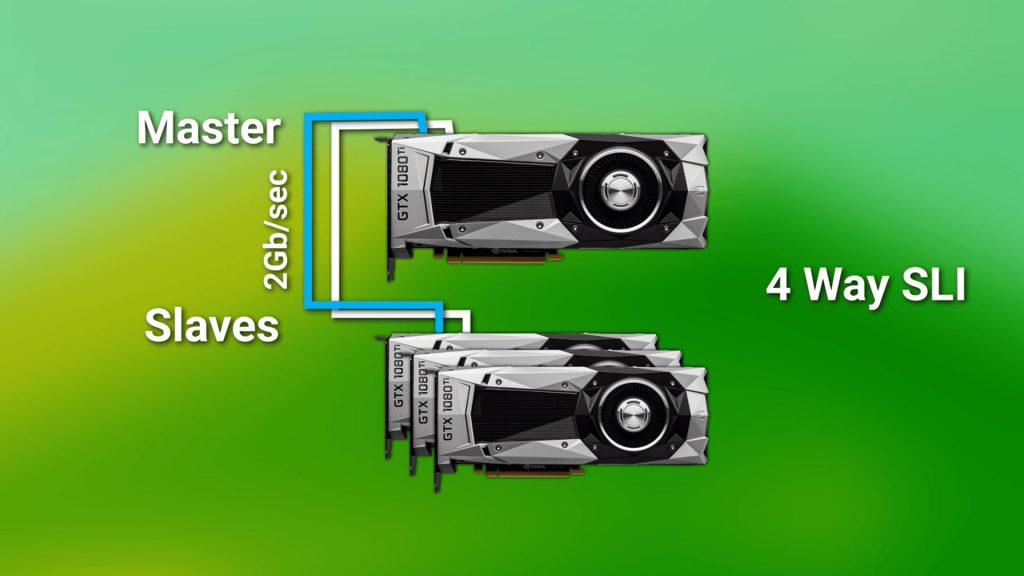

SLI (“Scalable Link Interface”) is a technology NVIDIA bought (as part of its acquisition of 3dfx’s assets) and developed further. It allows you to link together multiple similar GPUs (up to four).

Image-Source: Teckknow

It allows you to theoretically use all of the GPUs together to complete certain computational tasks even faster without having to make a whole new computer for each GPU or wait for a new generational leap of GPU hardware.

It works in a master-slave system wherein the “master” GPU (i.e. generally the topmost/first GPU in the computer) “controls” and directs the “slaves” (i.e. the other GPU/s), which are connected to it using SLI bridges.

The “master” acts as a central hub to make sure that the “slaves” can communicate and accomplish whatever task is currently being performed in an efficient and stable manner.

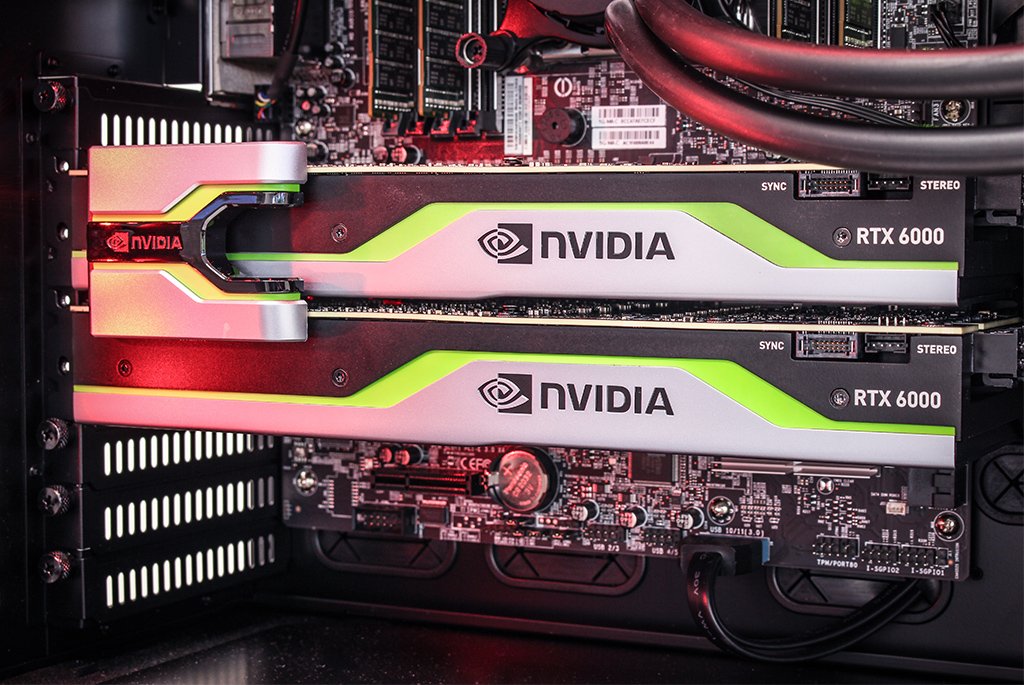

Image-Source: Nvidia

The “master” then collects all of this scattered information and combines it into something that makes sense before sending it all to the monitor to be viewed—by you.

Now, you might be wondering why we even need an SLI bridge. Can’t we simply use the PCIe slot and PCIe lanes instead of having to fiddle about with these bridges?

Well, in theory you can, but once you get to the higher/newer end of things (meaning anything made in the last 15-odd years), PCIe alone isn’t enough.

A PCIe lane can only carry so much data. Until very recently, if you tried to combine GPUs only through PCIe lanes, you’d quickly run into massive bandwidth bottlenecks, to the point where it would’ve been easier and faster to just use a single GPU.

So, GPU manufacturers generally don’t allow this. AMD is the exception (its later CrossFire cards dropped the bridge and communicated over PCIe), but that’s another matter entirely.

The point being, an SLI bridge tries to fix this. It’s a way of communicating between GPUs that circumvents the PCIe lanes and instead offloads that work directly onto the bridge.

Here’s an example:

When SLI was first released in ’04, the PCIe standard at the time (PCIe 1.0a) had a throughput of 250 MB/s (0.25 GB/s) per lane, whereas an SLI bridge had a throughput of around 1000 MB/s (1 GB/s).

The difference is apparent.

Image-Credit: PCI-SIG [PDF]
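To put those numbers in perspective, here is a rough back-of-the-envelope sketch in Python of how long it would take to move one finished frame across each link. It assumes an uncompressed 1920×1080 frame at 4 bytes per pixel, decimal megabytes, and that nothing else shares the link. Real SLI traffic was more complicated than a single frame copy, so treat this as an illustration only.

```python
def frame_bytes(width: int, height: int, bytes_per_pixel: int = 4) -> int:
    """Size of one uncompressed frame in bytes (4 bytes per pixel = 32-bit color)."""
    return width * height * bytes_per_pixel


def transfer_ms(num_bytes: int, mb_per_s: float) -> float:
    """Milliseconds needed to move num_bytes over a link of mb_per_s (1 MB = 10**6 bytes)."""
    return num_bytes / (mb_per_s * 1e6) * 1000.0


frame = frame_bytes(1920, 1080)  # 8,294,400 bytes
for name, rate in [("PCIe 1.0a, one lane", 250), ("SLI bridge", 1000)]:
    print(f"{name}: {transfer_ms(frame, rate):.1f} ms per frame")
print(f"Frame budget at 60 FPS: {1000 / 60:.1f} ms")
```

With these assumptions, a single PCIe 1.0a lane needs about 33.2 ms per frame, which is already longer than the 16.7 ms a frame gets at 60 FPS, while the bridge needs about 8.3 ms.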

Is SLI worth it?

Is SLI dead? Back in the days when you needed more than one GPU to be able to play the most demanding PC games and PCIe bandwidth was insufficient, SLI made sense.

Nowadays, GPUs have become so powerful that it makes more sense to buy one powerful GPU than to deal with all the issues SLI brings to the table: stuttering, poor game support, extra heat, extra power consumption, more cables in the case, and worse value for money. You don’t get 200% performance from two GPUs linked through SLI; it’s more like 150%.
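The “150% instead of 200%” figure is easier to judge as an efficiency number. This small Python sketch (the $700 card price is a made-up placeholder) computes how much of ideal linear scaling you get and how much performance per dollar you keep compared to a single card:

```python
def scaling_efficiency(speedup: float, num_gpus: int) -> float:
    """Share of ideal linear scaling achieved (1.0 = two GPUs are exactly twice as fast)."""
    return speedup / num_gpus


def perf_per_dollar(speedup: float, num_gpus: int, price_per_gpu: float) -> float:
    """Performance per dollar, with one GPU's performance counted as 1.0."""
    return speedup / (num_gpus * price_per_gpu)


PRICE = 700.0  # placeholder card price
single = perf_per_dollar(1.0, 1, PRICE)
dual = perf_per_dollar(1.5, 2, PRICE)  # "more like 150%" for two cards in SLI
print(f"Scaling efficiency: {scaling_efficiency(1.5, 2):.0%}")
print(f"Dual-GPU value relative to one GPU: {dual / single:.0%}")
```

The price cancels out here, so you keep only 75% of the single card’s value; any extra cost for a bridge or a bigger power supply would push that lower still.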

In addition, because so few gamers invest in an SLI setup, there is almost no incentive for game developers to support it.

So, yes, SLI is dead and is being phased out by Nvidia.

What is NVLink?

In simple terms, NVLink is the bigger, badder brother of SLI. It was originally exclusive to enterprise-grade GPUs but came to the consumer market with the release of the RTX 2000 series of cards.

Image-Source: GPUMag

NVLink came about to fix a critical flaw of SLI: bandwidth. SLI was fast, faster than PCIe at the time, but not fast enough, even with the high-bandwidth bridges that NVIDIA released later on. The GPUs could work together efficiently enough, yes, but that’s about all they could do with what SLI offered.

Here’s another example:

When NVLink was introduced, NVIDIA’s own high-bandwidth SLI bridge could handle up to 2000 MB/s (2 GB/s), and the PCIe standard at the time (PCIe 3.0) could handle 985 MB/s (0.985 GB/s) per lane.

NVLink, on the other hand? It could handle up to 300,000 MB/s (300 GB/s) or more in some enterprise configurations, and around 100,000 MB/s (100 GB/s) in consumer configurations.

Image-Source: Nvidia

The difference is even more apparent.
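Note that the PCIe figures above are per lane, while a graphics card usually sits in an x16 slot. The per-lane numbers follow from each generation’s transfer rate and line encoding (8b/10b for PCIe 1.x and 2.0, 128b/130b from PCIe 3.0 on). Here is a minimal Python sketch of that arithmetic; it ignores packet and protocol overhead, so real-world throughput is somewhat lower.

```python
# Transfer rate (GT/s), payload bits and encoded bits for each PCIe generation.
PCIE_GENERATIONS = {
    "1.0a": (2.5, 8, 10),     # 8b/10b line encoding
    "2.0": (5.0, 8, 10),      # 8b/10b line encoding
    "3.0": (8.0, 128, 130),   # 128b/130b line encoding
    "4.0": (16.0, 128, 130),  # 128b/130b line encoding
}


def lane_mb_per_s(gen: str) -> float:
    """One-direction bandwidth of a single lane in MB/s after line encoding.

    Packet/protocol overhead is ignored, so real throughput is somewhat lower.
    """
    gt_per_s, payload_bits, encoded_bits = PCIE_GENERATIONS[gen]
    bits_per_s = gt_per_s * 1e9 * payload_bits / encoded_bits
    return bits_per_s / 8 / 1e6


for gen in PCIE_GENERATIONS:
    per_lane = lane_mb_per_s(gen)
    print(f"PCIe {gen}: {per_lane:.0f} MB/s per lane, {per_lane * 16 / 1000:.2f} GB/s at x16")
```

It reproduces the 250 MB/s and roughly 985 MB/s per-lane figures quoted in this article.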

So with SLI, it was less that you were using multiple GPUs as one, and more that you were using multiple GPUs together and calling it one.

What’s the difference?

Latency. Yes, you can combine GPUs with SLI, but it still took a non-negligible amount of time for them to send information across, which isn’t ideal.

That’s where NVLink comes in to save the day.

NVLink did a good ol’ Abe Lincoln and did away with that master-slave system we talked about in favor of something called mesh networking.

Image-Source: Nvidia

In mesh networking, every GPU is independent and can talk with the CPU and every other GPU directly.

This—along with the larger number of pins and newer signaling protocol—gives NVLink far lower latency and allows it to pool together resources like the VRAM in order to do far more complex calculations.

Image-Source: Nvidia

Something that SLI couldn’t do.
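The difference between the two topologies can be modeled as a simple graph. The following Python sketch is an abstraction, not how SLI or NVLink actually route traffic: it treats “master-slave” as a star where every slave links only to the master, and “mesh” as every GPU linked to every other, then counts the links a message between two non-master GPUs has to cross.

```python
from collections import deque
from itertools import combinations


def star_links(n):
    """Master-slave: GPU 0 is the master, every other GPU links only to it."""
    return {(0, i) for i in range(1, n)}


def mesh_links(n):
    """Mesh: every GPU links directly to every other GPU."""
    return set(combinations(range(n), 2))


def hops(links, src, dst):
    """Breadth-first search: number of links a message crosses from src to dst."""
    neighbors = {}
    for a, b in links:
        neighbors.setdefault(a, set()).add(b)
        neighbors.setdefault(b, set()).add(a)
    seen, queue = {src}, deque([(src, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == dst:
            return dist
        for nxt in neighbors.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    raise ValueError("destination unreachable")


n = 4
for name, links in [("master-slave (star)", star_links(n)), ("mesh", mesh_links(n))]:
    print(f"{name}: {len(links)} links, GPU 1 -> GPU 2 takes {hops(links, 1, 2)} hop(s)")
```

With four GPUs, the star needs 3 links but slave-to-slave traffic takes 2 hops through the master, while the mesh needs 6 links and gets there in 1 hop. With the two cards consumer NVLink allows, both layouts collapse to a single direct link, so the topology advantage mostly shows up in larger enterprise setups.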

Drawbacks of NVLink

NVLink is still not a magical switch that you can turn on to gain more performance.

NVLink is great in some use cases, but not all.

Are you rendering complex scenes with millions of polygons? Editing videos and trying to scrub through RAW 12K footage? Simulating complex molecular structures?

These will all benefit greatly from two or more GPUs connected through NVLink (if the software supports this feature!).

Image-Source: Nvidia

But trying to run Cyberpunk 2077 on Ultra at 8K? Sorry, but sadly that isn’t going to work. And if it does, it’ll work badly. At least with the current state of things.

NVLink just didn’t take off as most had hoped. Even nowadays, multi-GPU gaming setups are prone to a host of problems that make it hard to even get the games running, and if you do, you’ll most likely be plagued by micro stuttering.

Not to mention that developer support for multi-GPU setups ranges from “meh” to non-existent.

Is NVLink worth it?

Mostly for productivity and enterprise use cases.

You can still run some select games on multiple GPUs, but the experience is not optimal, to say the least. You might get higher frame rates at the top end, but the overall experience is worse than with a single strong GPU.

Do you need SLI or NVLink for GPU Rendering?

We talked about productivity, and a large part of that is Rendering. Many 3D Render Engines such as Redshift, Octane, or Blender’s Cycles utilize your GPU(s) for this.

The great thing about GPU Rendering is that you won’t need SLI or NVLink to make use of multiple GPUs. If your GPUs are not linked in any way, the Render Engine will just upload your Scene Data to each of your GPUs, and each of them will render a part of your image.

You’ll usually notice this when you count the Render Buckets, whose number usually corresponds to the number of GPUs in your system.
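Here is a simplified Python model of that bucket approach, assuming a shared queue of buckets where each GPU grabs the next one as soon as it’s free. The bucket costs are invented, and the model ignores the time it takes to upload the scene to each card; it only shows why unlinked GPUs can still split the work.

```python
import heapq


def render_buckets(bucket_costs, num_gpus):
    """Hand each bucket to whichever GPU becomes free first.

    bucket_costs: hypothetical render time of each bucket in seconds.
    Returns (total wall-clock time, list of bucket indices rendered by each GPU).
    """
    free_at = [(0.0, gpu) for gpu in range(num_gpus)]  # (time the GPU is free, GPU id)
    heapq.heapify(free_at)
    assigned = [[] for _ in range(num_gpus)]
    for bucket, cost in enumerate(bucket_costs):
        t, gpu = heapq.heappop(free_at)  # earliest-available GPU takes the next bucket
        assigned[gpu].append(bucket)
        heapq.heappush(free_at, (t + cost, gpu))
    total = max(t for t, _ in free_at)
    return total, assigned


costs = [1.0] * 16  # 16 equally expensive buckets (an assumption)
one_gpu, _ = render_buckets(costs, 1)
two_gpus, split = render_buckets(costs, 2)
print(one_gpu, two_gpus, [len(b) for b in split])  # 16.0 8.0 [8, 8]
```

Keep in mind that in this mode every GPU holds its own full copy of the scene, so each card still needs enough VRAM for the whole scene on its own.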

Although none of the available GPU Render Engines require GPU linking, some can make use of NVLink.

When you connect two GPUs through NVLink, their memory (VRAM) can be pooled, and select Render Engines such as Redshift or Octane can make use of this feature.

In most scenarios, you will see no performance difference and your render times will stay the same. If, however, you work on highly complex scenes that require more GPU memory than your individual GPUs offer, you’ll likely see some speedups when using NVLink.

In my experience working in the 3D industry for the past 15 years, though, in most cases you will not need NVLink, and it’s usually a hassle to set up.

GPU Rendering NVLink Benchmark Comparison

Let’s take a look at some benchmarks. Chaos Group, the company behind the popular Render Engine V-Ray, has done some extensive testing and comparisons of rendering with and without NVLink.

Image-Source: Chaosgroup

Two RTX 2080 Tis and two RTX 2080s were each tested rendering five V-Ray scenes of varying complexity.

Four of the five scenes see a decrease in render performance with NVLink enabled. The reason is simple: although the VRAM is pooled, enabling NVLink adds resources that have to be managed, and that overhead comes out of render performance.

The first four scenes also don’t need more VRAM than any individual GPU has available.

The fifth scene (Lavina_Lake), though, could only be rendered on 2x 2080 Tis with NVLink enabled (combined VRAM capacity: 22 GB). This is because the scene was so complex that it needed more VRAM than a single GPU could offer, and more than 2x 2080s connected through NVLink were able to offer (combined VRAM capacity: 16 GB).

As you can see, NVLink is only practical in rare cases when your projects are too complex to fit into the available VRAM of your individual GPUs.
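The rule of thumb from this benchmark can be written as a quick check. This Python sketch assumes that, without NVLink, each GPU has to fit the whole scene on its own, and that with NVLink the pooled capacity is simply the sum of both cards. It ignores memory reserved by the driver and the Render Engine, and the scene sizes are hypothetical, not the Chaos Group scenes.

```python
def fits(scene_gb, vram_per_gpu_gb, num_gpus=2, nvlink=False):
    """Rough check whether a scene fits in GPU memory.

    Without NVLink every GPU holds its own full copy of the scene, so one card's VRAM
    is the limit. With NVLink pooling (only in Render Engines that support it) the
    limit is taken as the combined VRAM; driver and engine overhead are ignored.
    """
    capacity = vram_per_gpu_gb * num_gpus if nvlink else vram_per_gpu_gb
    return scene_gb <= capacity


CARDS = {"RTX 2080": 8, "RTX 2080 Ti": 11}  # VRAM per card in GB

for scene_gb in (6, 10, 18):  # hypothetical scene sizes
    for card, vram in CARDS.items():
        print(f"{scene_gb} GB scene on 2x {card}: "
              f"without NVLink {fits(scene_gb, vram)}, with NVLink {fits(scene_gb, vram, nvlink=True)}")
```

A hypothetical 18 GB scene only fits on the pair of 11 GB RTX 2080 Tis with NVLink, mirroring the Lavina_Lake result.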

FAQ

Can I use my SLI bridge that I have to get NVLink?

Nope. NVLink is a whole new standard with a whole new connector. Sadly, you’ll have to buy an NVLink bridge to use it.

How many cards can I link using SLI/NVLink?

- With SLI, it’s up to four cards.

- With NVLink, it’s two for consumers. In some enterprise setups, it’s up to 16 or even more.

Why is SLI/NVLink so bad in games?

- Mainly game implementation and micro stuttering. Both could potentially be fixed given enough development time, but there’s just no great incentive to optimize something that only ~1% of people will use.

Can I connect together different types of cards with SLI/NVLink?

- When SLI was still supported, you couldn’t link together different cards. A GTX 1080, for example, has to be paired with another GTX 1080; the manufacturer doesn’t matter for the most part.

- With NVLink, it’s a bit more complicated. There are some consumer GPUs that support being linked with enterprise-grade GPUs, but the support is spotty at best.

- Basically, get two matching cards and you’ll be golden.

Is SLI dead?

As good as, yes.

Do you need a special motherboard for NVLink?

No. Just 2 or more GPUs that support NVLink and an NVLink bridge.

Can you use AMD and NVIDIA GPUs together using NVLink?

No. But there have been attempts at using GPUs from both manufacturers together in the past.

Can the RTX 3060, 3070, 3080, 3090, etc. use NVLink?

Of the RTX 30 series, only the RTX 3090 (and the later RTX 3090 Ti) supports NVLink.

Do I have to use NVLink for multiple GPUs?

- Not really. For productivity purposes such as rendering, video editing, and other GPU-bound applications, you can use two GPUs independently, without having them pool their resources and work together. (E.g., GPU rendering in Redshift or Octane works great without NVLink.)

- As a matter of fact, this type of setup is quite beneficial in some situations. For example, you might want to dedicate one GPU to rendering while you use the other for other work, instead of handing control of your PC to the rendering gods while it does its thing.
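With NVIDIA cards, one common way to set up that split is the CUDA_VISIBLE_DEVICES environment variable, which limits which GPUs a CUDA application can see. Below is a minimal Python sketch that launches a background Blender render restricted to the second GPU. It assumes Blender is on your PATH and that Cycles is already set to render on the GPU with CUDA or OptiX in your preferences; "scene.blend" is a placeholder file name, and by default the function only builds the command (dry_run=True).

```python
import os
import subprocess


def render_on_gpu(blend_file, frame, gpu_index, dry_run=True):
    """Build (and optionally run) a background Blender render that sees only one GPU.

    CUDA_VISIBLE_DEVICES hides all other NVIDIA GPUs from this one process, so the
    remaining card stays free for interactive work.
    """
    env = dict(
        os.environ,
        CUDA_DEVICE_ORDER="PCI_BUS_ID",       # number GPUs in PCIe bus order, like nvidia-smi
        CUDA_VISIBLE_DEVICES=str(gpu_index),  # expose only this GPU to the render
    )
    cmd = ["blender", "-b", blend_file, "-f", str(frame)]  # -b: no UI, -f: render one frame
    if not dry_run:
        subprocess.run(cmd, env=env, check=True)
    return cmd, env


# "scene.blend" is a placeholder; pass dry_run=False to actually start Blender.
cmd, env = render_on_gpu("scene.blend", 1, gpu_index=1)
print(env["CUDA_VISIBLE_DEVICES"], " ".join(cmd))
```

Setting CUDA_DEVICE_ORDER to PCI_BUS_ID makes the GPU numbering follow the PCIe slot order instead of CUDA’s default fastest-first order, so index 1 is predictably the second card.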

What should I keep in mind if I decide to get another GPU?

- You should make sure that your motherboard has enough PCIe slots and that those slots have sufficient PCIe bandwidth to not bottleneck your additional GPU.

- If you have anything other than an ultra-cheap, ultra-small motherboard, you should be fine. Still, it doesn’t hurt to check.

- You should make sure that you have a power supply strong enough to actually support both GPUs.

- You should make sure that your case even has enough space for another GPU. They’re getting real big these days.

- And finally, you should make sure to buy an NVLink bridge with your second GPU as well.

That’s it from me. What are you using NVLink or SLI for? Let me know in the comments!

CGDirector is Reader-supported. When you buy through our links, we may earn an affiliate commission.

What Is NVLink And How Does It Differ From SLI? [Simple Guide]

If you’re wondering what happened to SLI and the idea of connecting multiple GPUs for a better performance, we have the answer — NVLink.

By Branko Gapo

The idea of connecting multiple graphics cards isn’t a new one. On the contrary, it has existed since at least the late 1990s.

Although this concept sounds cool on paper, SLI never really took off as anticipated. However, NVIDIA wasn’t prepared to back down, so they created NVLink as a direct successor.

Keep reading if you want to discover how NVLink differs from its predecessor and whether or not it has finally managed to fulfill the ambition of two graphics cards operating as one.

So, what is NVLink?

If you want the technical definition, NVLink is a wire-based, serial, multi-lane, near-range communications link. In simple terms, it’s a way of using two graphics cards as one for a large boost in performance.

The Difference Between NVLink And SLI Has Huge Potential

Unlike SLI, NVLink uses mesh networking, a local network topology in which the infrastructure nodes connect directly in a non-hierarchical way.

This enables each node to relay information instead of routing everything through a particular node. What’s useful about this setup is that nodes dynamically self-organize and self-configure, which allows dynamic distribution of the workload.

Essentially, where SLI struggled is where NVLink shines the brightest, and that is the speed at which data is exchanged.

NVLink doesn’t bother with SLI’s master-slave hierarchy, where one card in the setup works as the master and is responsible for gathering slaves’ data and compiling the final output. By using the mesh networking infrastructure, it can treat each node equally and thus significantly improve rendering speed.

The biggest advantage of NVLink, in comparison to SLI, is that, because of the mesh network, both graphics cards’ memories are constantly accessible.

This was a point of confusion for those unfamiliar with SLI’s multi-GPU setup. It seemed logical that if two GPUs each had a gigabyte of RAM, their combined memory would be two gigabytes. However, that simply wasn’t the case in practice: in SLI, each card kept its own copy of the same data, so the usable memory was still one gigabyte. With NVLink, it’s finally safe to say that one plus one equals two.

To handle frame rendering, SLI used Alternate Frame Rendering (or AFR for short), which meant that each connected card handled different frames.

In a two GPU setup, one card would render even frames while the other rendered odd frames. While this was a logical solution, it was not executed in the best way (mostly due to hardware limitations) and caused a lot of frustrating micro stuttering.
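Micro stuttering in AFR is a frame pacing problem, and a simplified timing model shows why. In this Python sketch (an idealized model, not how any driver actually schedules frames), each GPU needs 20 ms per frame and renders its frames back to back; the only difference between the two runs is how far apart the two GPUs start.

```python
def afr_finish_times(num_frames, gpu_frame_ms, num_gpus=2, stagger_ms=1.0):
    """Finish time (ms) of each frame under Alternate Frame Rendering.

    Frame i goes to GPU i % num_gpus. GPU g starts g * stagger_ms after GPU 0 and then
    renders its frames back to back, each taking gpu_frame_ms.
    """
    times = []
    for i in range(num_frames):
        gpu, nth = i % num_gpus, i // num_gpus
        times.append(gpu * stagger_ms + (nth + 1) * gpu_frame_ms)
    return times


def frame_intervals(times):
    """Gaps between consecutive frames, i.e. what the player actually perceives."""
    return [round(b - a, 3) for a, b in zip(times, times[1:])]


unpaced = afr_finish_times(8, gpu_frame_ms=20.0, stagger_ms=1.0)  # GPUs start almost together
paced = afr_finish_times(8, gpu_frame_ms=20.0, stagger_ms=10.0)   # starts spread evenly
print("unpaced:", frame_intervals(unpaced))
print("paced:  ", frame_intervals(paced))
```

Unpaced, frames arrive in pairs with gaps alternating between 1 ms and 19 ms; paced, every gap is 10 ms. Both deliver two frames every 20 ms (an average of 100 FPS), but only the paced version actually feels like it.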

Another key to faster image processing is the NVLink Bridge. SLI bridges had a 2 GB/s bandwidth at best, but the NVLink Bridge promises a ridiculous 200 GB/s in the most extreme cases. However, as you might have expected from such a huge number, it can be deceiving.

The 160 and 200 GB/s NVLink bridges can only be used for NVIDIA’s professional-grade GPUs, the Quadro GP100 and GV100, respectively. While this means there is technically a machine out there with those bandwidth speeds, they are designed for tasks such as AI testing or CGI rendering.

The bridges for consumer-focused GPUs are slower but still a vast improvement over SLI. Top-tier enthusiasts who acquire two Titan RTXs or two RTX 2080 Tis can potentially get a whopping 100 GB/s of bandwidth.

Is This Finally The Way A Multi-GPU System Becomes The Standard?

Sadly, it appears that we are still far from that. However, NVLink has the potential to introduce change with its “easier than ever” way for game developers to fully utilize everything that a multi-GPU setup has to offer.

Ironically, older games might produce fewer FPS with NVLink than with a single GPU. There are only a handful of modern games that can actually provide the 2-as-1 GPU experience.

This isn’t to discourage anyone, but it’s a simple fact that, although the potential is there, it’s currently not worth getting two GPUs and connecting them with an NVLink. The same applies to its predecessor, SLI.

That isn’t to say that this tech will never be viable, but the same problems that plagued SLI are currently holding back NVLink.

With the arrival of a new generation of GPUs from both NVIDIA and AMD, it will be intriguing to see if, and to what extent, we will see support for multi-GPU systems.

As of 2022, only NVIDIA’s enthusiast-grade RTX 3090 GPU has multi-GPU support built-in. On AMD’s side, it appears that implementation has also died out.

3DNews: GeForce RTX 40-series video cards have been deprived of…

21.09.2022 [21:41], Nikolai Khizhnyak

NVIDIA CEO Jensen Huang, after yesterday’s presentation of the GeForce RTX 40-series video cards based on the Ada Lovelace architecture, said that these new products lack the NVLink interface. This means it will not be possible to get several new-generation video cards to work together in a gaming PC.

Image Source: NVIDIA

According to Huang, the company ditched the NVLink interface and decided to use the Ada Lovelace generation’s transistor budget «for something else». In particular, the company’s engineers were tasked with devoting as much of the processor die as possible to the blocks that provide computing power for AI and machine learning workloads.

Note that NVIDIA set out long ago to abandon combining several consumer video cards. With the debut of the GeForce RTX 20-series video cards, the company abandoned SLI and replaced it with NVLink, but only on its higher-end cards, starting with the RTX 2080.

The company also confirmed that GeForce RTX 40-series graphics cards do not support PCIe 5.0. «Ada does not support PCIe Gen 5, but a PCIe 5.0 power cable adapter is included», an NVIDIA spokesperson told TechPowerUp. Recall that the GeForce RTX 40-series graphics cards use the new 12+4-pin 12VHPWR power connector, which complies with the PCIe 5.0 and ATX 3.0 standards. «The current PCIe 4.0 interface has sufficient bandwidth, so we decided that using PCIe 5.0 for the new generation of accelerators is not necessary. In addition, the large video buffer and large L2 cache in Ada graphics chips reduce the load on the PCIe interface», NVIDIA added.

Why is the NVIDIA RTX 3090 so «COOL» in SLI mode? Testing two top-end RTX 3090 video cards in games in an unusual format | ProGamer

SLI is a cool thing, but there are nuances.

NVIDIA’s SLI technology, which allows you to use more than one graphics card to boost your FPS in games, is finally getting support from game developers.

@ProGamer SLI mode

However, not everything is so «sweet» and «smooth».

ProGamer is with you, and today we will tell you, friends, about the advantages and disadvantages of NVIDIA’s top RTX 3090 video card in the unusual SLI mode.

So, the last nail in the coffin of SLI technology was, naturally, driven by NVIDIA itself.

This blog post on the NVIDIA site states that, as of January 2021, they will no longer develop new SLI profiles, either for future cards or for their past video cards.

The image is taken from open Internet sources

Based on this, we assumed that the new NVIDIA RTX 3090 graphics card also lacks support for this mode.

@ProGamer NVIDIA RTX 3090 graphics card

Curiously, however, the RTX 3090, NVIDIA’s latest top-end graphics card, actually has an NVLink connector, which, as of today, is the connector used for SLI mode, and which, strange as it may seem, was clearly not disabled.

There wasn’t even a cover on the connector, which is really very mysterious. So we decided to run two RTX 3090s in the unusual SLI mode and test this format in games.

So we start with two Asus ROG Strix Gaming RTX 3090s. We don’t really know much about this card other than that it’s made by Asus.

It is, of course, a real «heavyweight».

So we have a configuration of three counter-rotating fans.

So, the central one rotates clockwise, the rest rotate counterclockwise.

counter-rotating fan scheme, probably for better cooling of the GPU

In general, something like turbulence.

We deliberately leave our computer open for better airflow and to keep the processor and video cards from overheating.

Fun fact about our system configuration.

Here we have the MSI Z490 GODLIKE, which was literally the only motherboard in our studio that could handle this unusual graphics card configuration.

This motherboard’s four-slot spacing is great for leaving some airflow for the top video card.

And trust us, guys: if you’re running two RTX 3090s, you’ll need the fastest gaming CPU, which, at least for now, is the Core i9-10900K.

@ProGamer …we certainly don’t mind Ryzen, but Intel will be faster…

So, this is crazy, but it seems to be ready.

Cool, the entire lower part of the case is filled with graphics cards.

I like that these fans will blow straight into the bottom card.

This is a good cooling configuration in our opinion.

So, there was a little problem.

We have four 8-pin connectors, and that’s not enough today.

Each RTX 3090 graphics card requires three eight-pin PCI express connectors.

Okay, we got the cables, but what about the power supply?

We have a 1000-watt Seasonic. Do you think it will be enough? I doubt it will handle the system, but let’s try.

So what do we have here: an NVLink bridge from NVIDIA for 30-series graphics cards.

Judging by the bridge connectors, the throughput should be increased.

@proGamer bridge NVLink

So wait, at what point did NVLink replace SLI? They seem to have turned off support. Here’s the trick.

The image is taken from open sources on the Internet

So, guys, NVLink is just the name of this communication protocol, which turns out to be much faster than the original SLI.

The SLI mode itself has been, and still is, the brand name for running several graphics cards together.

The reason NVLink was developed was not related to games.

This was actually designed for data center products, artificial intelligence and high speed internet.

So probably the NVLink mode should be much faster than the SLI interface, and it might even let multiple graphics cards share their memory and work on much larger jobs.

Okay, please tell me it will just boot up now.

Wow. Cool!

Excellent.

Now that we’re done with that, let’s take a look at the wide range of games with built-in SLI support that we can enjoy.

So what do we have: «Shadow of the Tomb Raider», «Civilization VI», «Sniper Elite 4», «Gears of War 4», «Ashes of the Singularity: Escalation», «Strange Brigade», «Rise of the Tomb Raider», «Zombie Army 4: Dead War», «Hitman», «Deus Ex: Mankind Divided», «Battlefield 1», «Halo Wars 2».

Oh, these are all DirectX 12 games.

Damn it. Cool. Almost every game is supported in SLI mode.

Damn! Everything went out! Did you guys know this was going to happen?

There was some strange flickering and everything turned off.

Perhaps I should have foreseen this. Power supply, dude!

It should be noted that while 1000 W is probably enough to actually power these graphics cards, under sustained load the power supply may see sudden current spikes.

And this can actually trip the power supply’s overcurrent protection, even though, in theory and on paper, it should be able to handle this load.

Still, for years NVIDIA and AMD have both been telling us about recommended power supplies along the lines of: «…it’s ok guys, you can use even less power and it’ll work…».

In short, friends, I would really say that you should go 20-30% above their PSU wattage recommendations so you never experience anything like this.
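To make that advice concrete, here is a rough PSU sizing sketch in Python. The 350 W per card is NVIDIA’s reference board power for the RTX 3090 (factory-overclocked cards like the ROG Strix draw more), the CPU and rest-of-system numbers are assumptions for illustration, and the 75 W slot and 150 W per 8-pin connector are the PCIe power ratings.

```python
PCIE_SLOT_W = 75    # PCIe x16 slot power rating
PCIE_8PIN_W = 150   # rating of one 8-pin PCIe power connector


def max_card_power(num_8pin: int) -> int:
    """Power a card can draw within spec: slot power plus its 8-pin connectors."""
    return PCIE_SLOT_W + num_8pin * PCIE_8PIN_W


def recommended_psu(component_watts, margin: float) -> float:
    """Estimated total draw plus a safety margin (0.2 means 20%)."""
    return sum(component_watts) * (1 + margin)


# Illustrative estimates for a dual RTX 3090 build (CPU and "rest" are assumptions):
system = [
    350, 350,  # two RTX 3090s at NVIDIA's 350 W reference board power
    250,       # CPU under heavy load (assumed)
    100,       # motherboard, RAM, storage and fans (assumed)
]

print(f"In-spec limit per card with 3x 8-pin: {max_card_power(3)} W")
print(f"Estimated load: {sum(system)} W")
for margin in (0.2, 0.3):
    print(f"With {margin:.0%} margin: {recommended_psu(system, margin):.0f} W")
```

Under these assumed numbers, the estimated load alone already exceeds 1000 W before any margin, while a 1600 W unit clears even the 30% margin. Transient spikes like the ones described above come on top of this.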

So, we swap the power supply for a Corsair AX1600i; now it will definitely hold up.

For the test, we take the game «Shadow of the Tomb Raider» in 4K and at the highest settings.

Looks damn good.

What’s even more amazing to us is the utilization of these GPUs.

Both are over 80% used!

great result

This is some kind of «cool» madness.

Even at our lowest FPS, we don’t see anything below 50-55 fps.

While not entirely smooth, sometimes it feels like you only have one GPU. It’s a bit strange.

Now, to measure a little more objectively, we’ll log the framerate for 180 seconds while the «Shadow of the Tomb Raider» benchmark runs its course.
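Benchmark tools generally log individual frame times, and summary numbers like average FPS and “1% low” are computed from them. Here’s a minimal Python sketch of that calculation. The frame-time capture is made up, and it uses one of several common definitions of “1% low”: the 99th-percentile frame time converted to FPS.

```python
def fps_stats(frame_times_ms):
    """Return (average FPS, '1% low' FPS) for a list of frame times in milliseconds.

    '1% low' here is the 99th-percentile frame time (nearest-rank method) converted
    to FPS; some tools instead average the slowest 1% of frames.
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    n = len(frame_times_ms)
    avg_fps = 1000.0 * n / sum(frame_times_ms)
    ordered = sorted(frame_times_ms)
    rank = (99 * n + 99) // 100  # ceil(0.99 * n), 1-based nearest rank
    p99_ms = ordered[rank - 1]
    return avg_fps, 1000.0 / p99_ms


# Made-up capture: 980 smooth frames at 16 ms plus 20 hitches at 40 ms.
times = [16.0] * 980 + [40.0] * 20
avg, low = fps_stats(times)
print(f"average {avg:.1f} FPS, 1% low {low:.1f} FPS")
```

In this made-up capture, 2% of frames take 40 ms. That barely moves the average (about 60.7 FPS) but drags the 1% low down to 25 FPS, which is why a high average can still feel stuttery.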

While everything is being tested, we will check our maximum power consumption.

Because in this mode the GPUs run at 90-100% load, and the system also powers the motherboard, processor and fans.

I feel like the electricity bill is going to be hefty.

So it is: I suspected that 1000 watts would not be enough.

Remember that the power supply is not 100% efficient, even if it is 80 Plus Platinum or Titanium. Therefore, always leave a margin of 20-30%.

So, what’s with the numbers, I already see something unreal.

And in the last generation, NVLink was only in the flagship GeForce RTX 3090 and RTX 3090 Ti.