A security flaw leads Intel to disable DirectX 12 on its 4th Gen CPUs


When you purchase through links on our site, we may earn an affiliate commission. Here’s how it works.

(Image credit: Intel)


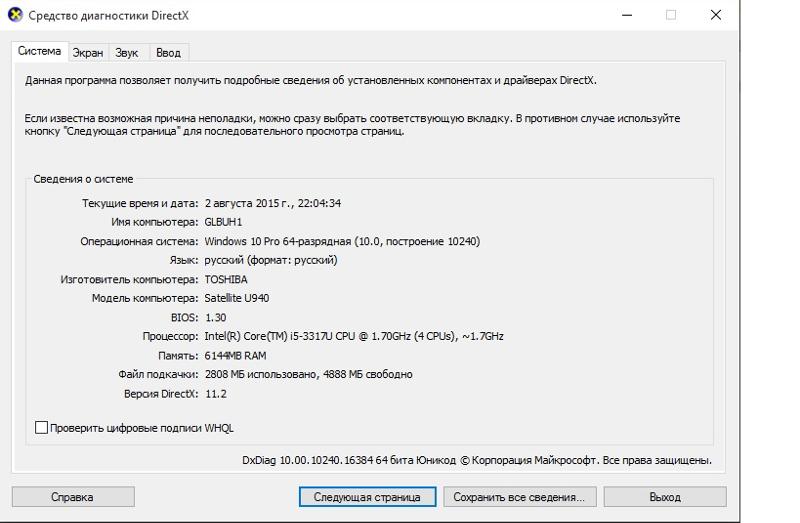

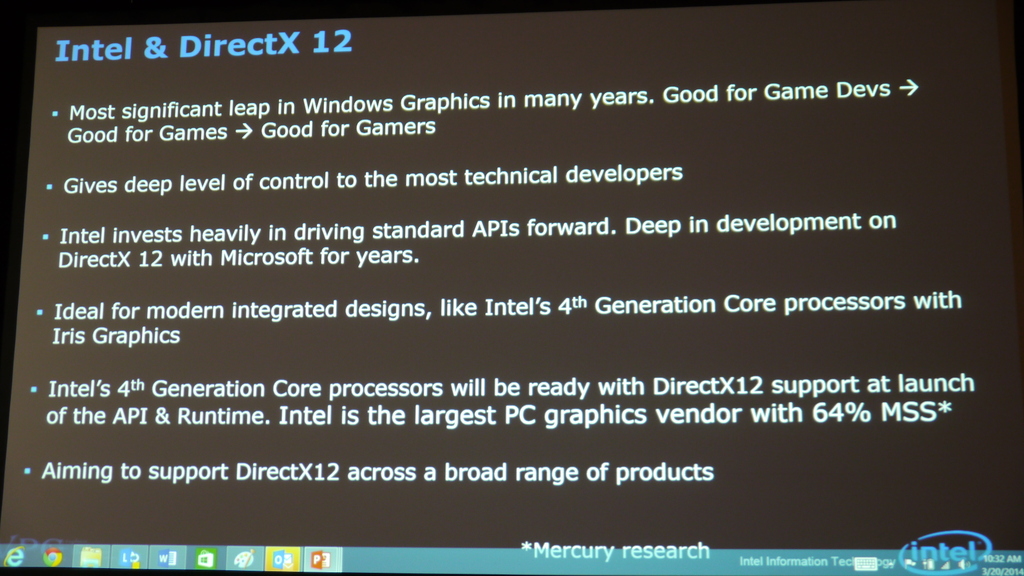

Intel has disabled DirectX 12 support on its 4th generation Haswell processors with onboard graphics due to a security vulnerability. The vulnerability may allow escalation of privilege; in other words, it could let an unauthorized user perform unwanted actions.

It would seem to be a rather drastic action to disable DirectX 12 support completely, rather than issue an updated driver or patch, so it will be interesting to see if Intel makes further comment on the specifics of the vulnerability, or whether it has the potential to be exploited on other CPU generations.

Starting with driver 15.40.44.5107, DirectX 12 is disabled on CPUs with Iris Pro 5200, Iris 5100, HD 5000, 4600, 4400 and 4200 graphics, along with 4th Generation Pentium and Celeron models. Furthermore, according to Tom’s Hardware, 3rd Generation Ivy Bridge processors, which use the closely related Gen 7 GPU architecture, are seemingly unaffected, at least for now.

Though most enthusiast gamers have moved on from Haswell processors, there are no doubt many 4000 series CPUs still chugging along in desktops and particularly laptops worldwide. Users who rely on the HD and Iris graphics of that era are unlikely to be running much DX12 content anyway, so this may turn out to be something of a nothing burger.


Still, it’s a bit of a black eye for Intel, which has received some bad PR in recent years over security issues, notably the Spectre and Meltdown vulnerabilities.

In the meantime, Intel recommends that users downgrade to driver version 15.40.42.5063 or older in order to run DirectX 12 content, and if Intel is willing to suggest that, maybe the problem isn’t all that serious.
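A minimal sketch for checking which side of the cutoff a given driver build falls on, using only the two version numbers named in the article. The helper names are hypothetical; the code assumes the four-part dotted version format used above and that builds after 15.40.44.5107 keep DX12 disabled on the affected Haswell GPUs. It does not read the installed driver from the system.

```python
from typing import Tuple

# Versions taken from the article; everything else here is illustrative.
FIRST_DX12_DISABLED = (15, 40, 44, 5107)  # DX12 disabled on Haswell iGPUs from this build
LAST_DX12_ENABLED = (15, 40, 42, 5063)    # Intel's suggested downgrade target


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn a dotted string such as '15.40.44.5107' into (15, 40, 44, 5107)."""
    return tuple(int(part) for part in text.strip().split("."))


def dx12_status(version: str) -> str:
    """Classify a driver build; tuples compare field by field, left to right."""
    v = parse_version(version)
    if v >= FIRST_DX12_DISABLED:
        return "dx12-disabled"
    if v <= LAST_DX12_ENABLED:
        return "dx12-available"
    return "unknown"  # builds between the two are not covered by the article


if __name__ == "__main__":
    for ver in ("15.40.44.5107", "15.40.42.5063", "15.40.41.5058"):
        print(ver, dx12_status(ver))
```

Comparing integer tuples rather than raw strings avoids the classic mistake where "15.40.9" would sort after "15.40.44".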


Chris’ gaming experiences go back to the mid-nineties when he conned his parents into buying an ‘educational PC’ that was conveniently overpowered to play Doom and Tie Fighter. He developed a love of extreme overclocking that destroyed his savings despite the cheaper hardware on offer via his job at a PC store. To afford more LN2 he began moonlighting as a reviewer for VR-Zone before jumping the fence to work for MSI Australia. Since then, he’s gone back to journalism, enthusiastically reviewing the latest and greatest components for PC & Tech Authority, PC Powerplay and currently Australian Personal Computer magazine and PC Gamer. Chris still puts far too many hours into Borderlands 3, always striving to become a more efficient killer.

PC Gamer is part of Future plc, an international media group and leading digital publisher.

© Future Publishing Limited, Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.

Intel Arc GPUs use DirectX 9 to DirectX 12 emulator, no native DX9 API

Video Cards & GPUs

Intel Xe and Arc GPUs will use DX12 to emulate DX9: Arc GPUs have no native DX9 support whatsoever and rely on Microsoft’s D3D9On12 interface instead.

Intel has officially dropped native DirectX 9 hardware support for its integrated Xe GPUs on its 12th Gen Core «Alder Lake» CPUs, as well as its upcoming Arc A-series desktop GPUs.

In its place, Intel will use a DX9-to-DX12 emulator from Microsoft called «D3D9On12». DirectX 9 graphics commands are sent to the D3D9On12 layer instead of directly to a D3D9 graphics driver, and as soon as the layer receives a command from the D3D9 API, it converts it into D3D12 API calls.
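To make the translation idea concrete, here is a toy Python model of a layer that accepts D3D9-style calls and records D3D12-style commands. It is not how D3D9On12 is implemented, and every class, method and command name is hypothetical. It illustrates one real difference such a layer has to bridge: D3D9 keeps render state implicitly between draws, while D3D12 expects state to be bound explicitly (as pipeline state) on a command list. The sketch therefore snapshots the current state and re-binds it only when it changes; that caching strategy is an assumption, not a description of Microsoft's code.

```python
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class CommandList:
    """Stand-in for a D3D12-style command list: it only records work."""
    commands: list = field(default_factory=list)

    def record(self, name: str, **args: Any) -> None:
        self.commands.append((name, args))


@dataclass
class D3D9On12Sketch:
    """Hypothetical translation layer: D3D9-style calls in, recorded commands out."""
    cmd_list: CommandList = field(default_factory=CommandList)
    state: dict = field(default_factory=dict)  # current D3D9-style render state
    bound: Optional[dict] = None               # state last bound on this command list

    def set_render_state(self, key: str, value: Any) -> None:
        # D3D9 style: only update tracked state; nothing is recorded yet.
        self.state[key] = value

    def draw_primitive(self, topology: str, start_vertex: int, primitive_count: int) -> None:
        # D3D12 style: bind an explicit state snapshot before the draw,
        # but skip the bind if nothing changed since the last one.
        if self.state != self.bound:
            snapshot = dict(self.state)
            self.cmd_list.record("bind_pipeline", state=snapshot)
            self.bound = snapshot
        self.cmd_list.record("draw", topology=topology,
                             start=start_vertex, primitives=primitive_count)

    def present(self) -> list:
        # Hand over the recorded batch and start a fresh list; a new list
        # inherits no bound state, so the next draw must bind again.
        submitted = self.cmd_list.commands
        self.cmd_list = CommandList()
        self.bound = None
        return submitted


if __name__ == "__main__":
    layer = D3D9On12Sketch()
    layer.set_render_state("z_enable", True)
    layer.draw_primitive("triangle_list", 0, 2)
    layer.draw_primitive("triangle_list", 6, 2)
    for name, args in layer.present():
        print(name, args)
```

With unchanged state between the two draws, the batch contains one bind followed by two draws; the extra bookkeeping per call is one reason a translation layer can cost some performance compared with a native driver.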

D3D9On12 effectively acts as a GPU driver in its own right, standing in for a DX9-capable driver from Intel. Microsoft says that the performance of its DX9-to-DX12 emulator should be nearly as good as, if not equal to, native DX9 hardware support. It’s surprising that Intel Xe and Arc GPUs lack native DX9 support, but DX9-based games should still be able to run even on the mid-range first-gen Arc A-series GPUs.



Intel will from here on out focus on optimizing its Arc GPU drivers for DirectX 11 and DirectX 12, while native DirectX 9 support will remain for Intel’s GPU architectures that predate Xe. Intel explains the DirectX 9 to DirectX 12 emulation on its 12th Gen Core «Alder Lake» CPUs and their integrated GPUs, as well as its Arc discrete GPUs, below:

Does my system with Intel Graphics support DX9?

12th generation Intel processor’s integrated GPU and Arc discrete GPU no longer support D3D9 natively. Applications and games based on DirectX 9 can still work through Microsoft* D3D9On12 interface.

The integrated GPU on 11th generation and older Intel processors supports DX9 natively, but they can be combined with Arc graphics cards. If so, rendering is likely to be handled by the card and not the iGPU (unless the card is disabled). Thus, the system will be using DX9On12 instead of DX9.

Since DirectX is property of and is sustained by Microsoft, troubleshooting of DX9 apps and games issues require promoting any findings to Microsoft Support so they can include the proper fixes in their next update of the operating system and the DirectX APIs.


NEWS SOURCES:videocardz.com, tomshardware.com

Anthony Garreffa

Anthony joined the TweakTown team in 2010 and has since reviewed 100s of graphics cards. Anthony is a long time PC enthusiast with a passionate hatred of games built around consoles. He has been FPS gaming since the pre-Quake days, when you were insulted if you used a mouse to aim, and has been addicted to gaming and hardware ever since. Working in IT retail for 10 years gave him great experience with custom-built PCs. His addiction to GPU tech is unwavering.


Intel’s own measurements of the Arc A750 video card in DirectX 12 and Vulkan games

08/23/22

Intel published test results for the next-to-top Arc A750 Limited Edition in almost fifty games based on the DirectX 12 and Vulkan APIs. Intel Arc graphics cards are known to perform best in these graphics APIs.


Performance was measured at 1080p with ultra graphics settings and at 1440p with high settings. The test system was built around an Intel Core i9-12900K processor, and the EVGA GeForce RTX 3060 XC Gaming was chosen as a direct competitor to the Arc A750. Note that this is one of the most heavily factory-overclocked versions of the GeForce RTX 3060 on the market.

Intel is still in no hurry to reveal the detailed specifications of the Arc A750, but unofficial sources indicate that it is based on the ACM-G10 GPU in a configuration with 28 Xe-cores (3584 shader processors) and a 256-bit memory bus, paired with 8 GB of GDDR6 video memory.
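The shader count follows directly from the Xe-core count. A quick check, assuming the Xe-HPG (Alchemist) layout Intel has described publicly: 16 vector engines per Xe-core and 8 FP32 lanes per vector engine. The function name is illustrative.

```python
# Xe-HPG (Alchemist) building blocks, as assumed in the lead-in above.
VECTOR_ENGINES_PER_XE_CORE = 16
FP32_LANES_PER_VECTOR_ENGINE = 8


def shader_count(xe_cores: int) -> int:
    """Shader processors (FP32 lanes) for a given number of Xe-cores."""
    return xe_cores * VECTOR_ENGINES_PER_XE_CORE * FP32_LANES_PER_VECTOR_ENGINE


if __name__ == "__main__":
    print(shader_count(28))  # 3584
    print(shader_count(24))  # 3072
```

By this arithmetic, 28 Xe-cores give 3584 shader processors, while a 3072-shader configuration would correspond to 24 Xe-cores.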

On average, according to Intel’s benchmarks, the Arc A750 Limited Edition turned out to be 3-5% faster than the RTX 3060. However, it is well established that the weak point of all Arc A-series cards is games using older APIs such as DirectX 9.

Intel promises to base its pricing on the performance of Arc Alchemist graphics cards in unoptimized and older games, which should let it «destroy everyone by price-to-performance ratio» in today’s AAA titles. The higher-end Intel Arc A-series video cards should go on sale this quarter, that is, before the end of September.


DirectX 12: Incredible performance boost

DirectX 12 dramatically improves game performance on Windows 10. Microsoft expects DX12 to more than double the frame rate of DX11. But according to the new Futuremark API Overhead Feature test, this estimate seems too conservative.

Not long ago, Microsoft and Futuremark published the latest results from the 3DMark test, which lets you evaluate how much more efficient the Windows 10 gaming API is than its predecessor. But before the advertising train departs the station with joyful beeps, let me remind you that this is a purely theoretical test that has nothing to do with real game engines.

Our test computer was equipped with an Intel Core i7-4770K processor, an ASUS Z87 Deluxe/Dual motherboard, 16GB of DDR3/1600 RAM and a 240GB Corsair Neutron SSD. The graphics cards were a Gigabyte WindForce Radeon R9 290X and a GeForce GTX Titan X. On the ASUS board, I disabled the MultiCore Enhancement feature, which overclocks the processor. As recommended by Microsoft, all tests were performed at a resolution of 1280×720 pixels.

3DMark and Microsoft emphasize that the new benchmark is not a GPU comparison tool at all, but merely offers an easy way to evaluate performance and API efficiency on the same PC. Do not use it to compare PC X to PC Y or to test graphics cards. This is only a comparison of DX11 and DX12 within the selected hardware configuration.

During testing, commands are sent to the GPU to draw something on the screen. Each instruction passes through an API: DX11, DX12 or AMD Mantle. The less efficient the API is at handling these requests from the CPU to the GPU, the fewer objects will appear on the screen. 3DMark rapidly ramps up the number of requests and objects until the frame rate drops below 30 fps.
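The ramp-up procedure can be sketched as a simple search. This is not Futuremark's code: the frame-time model (a fixed per-frame cost plus a fixed CPU cost per draw call), the function names and the default values are all assumptions, chosen only to show why a lower per-call overhead lets more calls fit before the frame rate falls below 30 fps.

```python
from typing import Tuple


def frame_time_ms(draw_calls: int, per_call_us: float, base_ms: float = 2.0) -> float:
    """Hypothetical cost model: fixed frame cost plus CPU cost per draw call."""
    return base_ms + draw_calls * per_call_us / 1000.0


def ramp_until_below(per_call_us: float, step: int = 1000,
                     target_fps: float = 30.0) -> Tuple[int, float]:
    """Raise the draw-call count in fixed steps until the next step would fall
    below target_fps. Returns (calls per frame, calls per second) for the last
    load that still met the target."""
    if per_call_us <= 0:
        raise ValueError("per-call cost must be positive, or the ramp never ends")
    calls = 0
    while True:
        next_calls = calls + step
        if 1000.0 / frame_time_ms(next_calls, per_call_us) < target_fps:
            break
        calls = next_calls
    fps = 1000.0 / frame_time_ms(calls, per_call_us)
    return calls, calls * fps


if __name__ == "__main__":
    # Two made-up per-call costs standing in for a "heavier" and a "lighter" API.
    for label, cost_us in (("high-overhead API", 10.0), ("low-overhead API", 1.0)):
        calls, per_second = ramp_until_below(cost_us)
        print(f"{label}: {calls} calls/frame, about {per_second:,.0f} calls/s")
```

In this model, cutting the per-call cost tenfold lets roughly ten times as many calls fit into the 33 ms frame budget, which is the kind of gap the API overhead test is designed to expose.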


The first test is a comparison of DirectX 11 performance in single-threaded and multi-threaded mode. The AMD Mantle API was then tested (if supported by the hardware) and finally DirectX 12 performance was evaluated.

As you can see in the diagram, DirectX 11 single-threaded and multi-threaded performance leaves much to be desired: with the Gigabyte WindForce Radeon R9 290X installed, it barely reaches 900k requests per second before the frame rate drops below 30 fps. With AMD Mantle, the number rises to 12.4 million requests per second.

It’s hard to say which API was developed first (I’ve heard that DirectX 12 has been in development for several years, while other reports suggest that AMD had the lead), but the test at least reveals the potential of both APIs. And here DirectX 12 proved somewhat more efficient, exceeding 13.4 million requests per second.
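Putting the R9 290X figures side by side makes the size of the gap clearer. The snippet only divides the numbers reported in the text; the DX11 value is the approximate 900k ceiling, so ratios against it are rough.

```python
# Requests (draw calls) per second reported for the Radeon R9 290X system.
results = {
    "DX11": 0.9e6,    # approximate ceiling ("barely reaches 900k")
    "Mantle": 12.4e6,
    "DX12": 13.4e6,
}

baseline = results["DX11"]
for api, calls in results.items():
    print(f"{api:>6}: {calls / 1e6:5.1f} M calls/s, {calls / baseline:5.1f}x DX11")

print(f"DX12 vs Mantle: {results['DX12'] / results['Mantle'] - 1:.1%} more")
```

Both low-overhead APIs land more than 13 times above the DX11 ceiling, and DX12 comes out roughly 8% ahead of Mantle.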

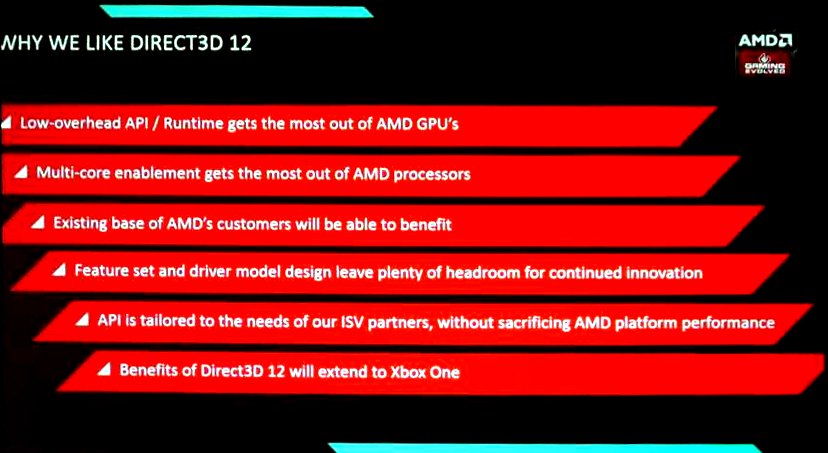

The debate over Mantle versus DirectX can be considered over anyway, since AMD itself now encourages developers to use DirectX 12 or Vulkan, the successor to OpenGL, which is focused primarily on games.

Remember when I noted that Futuremark does not recommend this test for comparing graphics cards? Nevertheless, it was interesting to see how it behaves on NVIDIA hardware, so I repeated the run after replacing the R9 290X with the GeForce GTX Titan X. Naturally, Mantle no longer works in this case, because that interface is supported only by AMD hardware.

It is also interesting to evaluate the effectiveness of DX12 on integrated graphics processors. Since more «gamers» use integrated Intel graphics than discrete AMD and NVIDIA cards, this question is quite natural, but let’s be fair: gaming on integrated graphics hardly counts as gaming. Therefore, I did not see much point in testing Intel’s integrated graphics.

Meanwhile, Microsoft has published its own test results on a machine equipped with a Core i7-4770R Crystal Well processor with Intel Iris Pro 5200 integrated graphics. This quad-core chip is used in high-end all-in-ones and the Gigabyte Brix Pro computer. Its Iris Pro 5200 graphics are the fastest integrated graphics Intel currently offers, so the results are probably worthy of attention.

All in all, the results looked pretty good, at least until you compare them with the earlier AMD and NVIDIA charts.

But remember that DirectX 12 is more efficient and performs better on multi-core processors. In this case, we are not talking about graphics cards, but about ensuring that the CPU does not become a bottleneck for the graphics card.

With all that said, we decided to test the impact that the CPU has on the results by varying the number of cores, processor threads, and clock speeds in different configurations. I disabled two cores on the quad-core Core i7-4770K, enabled and disabled Hyper-Threading mode, and limited the processor clock speed.

The Core i7-4770K performs best in its default configuration, with four cores and Hyper-Threading enabled. All tests were performed on a GeForce GTX Titan X board. I only compared DirectX 12 performance, since that’s what we were interested in.

What is the best processor for DirectX 12?

I also tried varying the clock speed, number of cores, and Hyper-Threading mode on different Intel processors. This is important for choosing components when buying or assembling a computer yourself.

Please note that the results presented here only simulate actual performance. The Core i7-4770K processor has 8 MB of cache, while the Celeron has only 2 MB and the Core i5 has 6 MB. In most tests, cache size did not have a noticeable effect on performance. However, to simulate the Core i5-4670K I had to lower the clock speed, and the lowest available setting was 3.6 GHz, 200 MHz higher than that chip’s nominal frequency.

Performance factors

Based on our simulations, we can conclude that for DirectX 12 performance, the number of threads is more important than clock speed.

Take a look at the simulation results of a dual-core Core i3-4330 running at 3.5 GHz with Hyper-Threading but no Turbo Boost. Compare them with the results of a cheaper dual-core Pentium G3258 overclocked to 4.8 GHz. Designed for gamers on a budget, this Pentium K chip is the only unlocked dual-core chip in Intel’s lineup, and it sells for very little. It overclocks very easily, and anyone who wants to can reach 4.8 GHz without much effort.

But look at the test results. Despite running 1.3 GHz faster than the simulated Core i3, the Pentium G3258 performs only marginally better in the DirectX 12 request test. There is nothing surprising in this. Back in 2011, when testing the second generation of Core processors (Sandy Bridge), I found that in multi-threaded tasks a Core i5 chip clocked about 1 GHz higher showed about the same performance as a Core i7 with Hyper-Threading enabled.

DX12 performance increases noticeably with the number of cores. Again, compare the results of the dual-core, 4-thread Core i3-4330 and the quad-core, 8-thread Core i7-4770K: they differ by almost a factor of 2.

Unfortunately, the octa-core Core i7-5960X processor was busy in other tests, and I didn’t get to test it with the new 3DMark test set. But I’m quite sure that if you install an eight-core processor that supports Hyper-Threading, the performance increase will be very significant.

And, of course, we cannot ignore AMD’s best FX-family processors. As a rule, at equal clock frequency, AMD chips are inferior to Intel’s. The quad-core Haswell easily outperforms the eight-core FX chip in most performance tests. But in multi-threaded tasks, the FX processor, despite its cores sharing cache memory, comes noticeably closer to Haswell and in some cases surpasses it. For DirectX 12, this is very significant, since the eight-core FX processor sells for only $153.
